Add InstantSearch and Autocomplete to your search experience in just 5 minutes

A good starting point for building a comprehensive search experience is a straightforward app template. When crafting your application’s ...

Senior Product Manager

A good starting point for building a comprehensive search experience is a straightforward app template. When crafting your application’s ...

Senior Product Manager

The inviting ecommerce website template that balances bright colors with plenty of white space. The stylized fonts for the headers ...

Search and Discovery writer

Imagine an online shopping experience designed to reflect your unique consumer needs and preferences — a digital world shaped completely around ...

Senior Digital Marketing Manager, SEO

Winter is here for those in the northern hemisphere, with thoughts drifting toward cozy blankets and mulled wine. But before ...

Sr. Developer Relations Engineer

What if there were a way to persuade shoppers who find your ecommerce site, ultimately making it to a product ...

Senior Digital Marketing Manager, SEO

This year a bunch of our engineers from our Sydney office attended GopherCon AU at University of Technology, Sydney, in ...

David Howden &

James Kozianski

Second only to personalization, conversational commerce has been a hot topic of conversation (pun intended) amongst retailers for the better ...

Principal, Klein4Retail

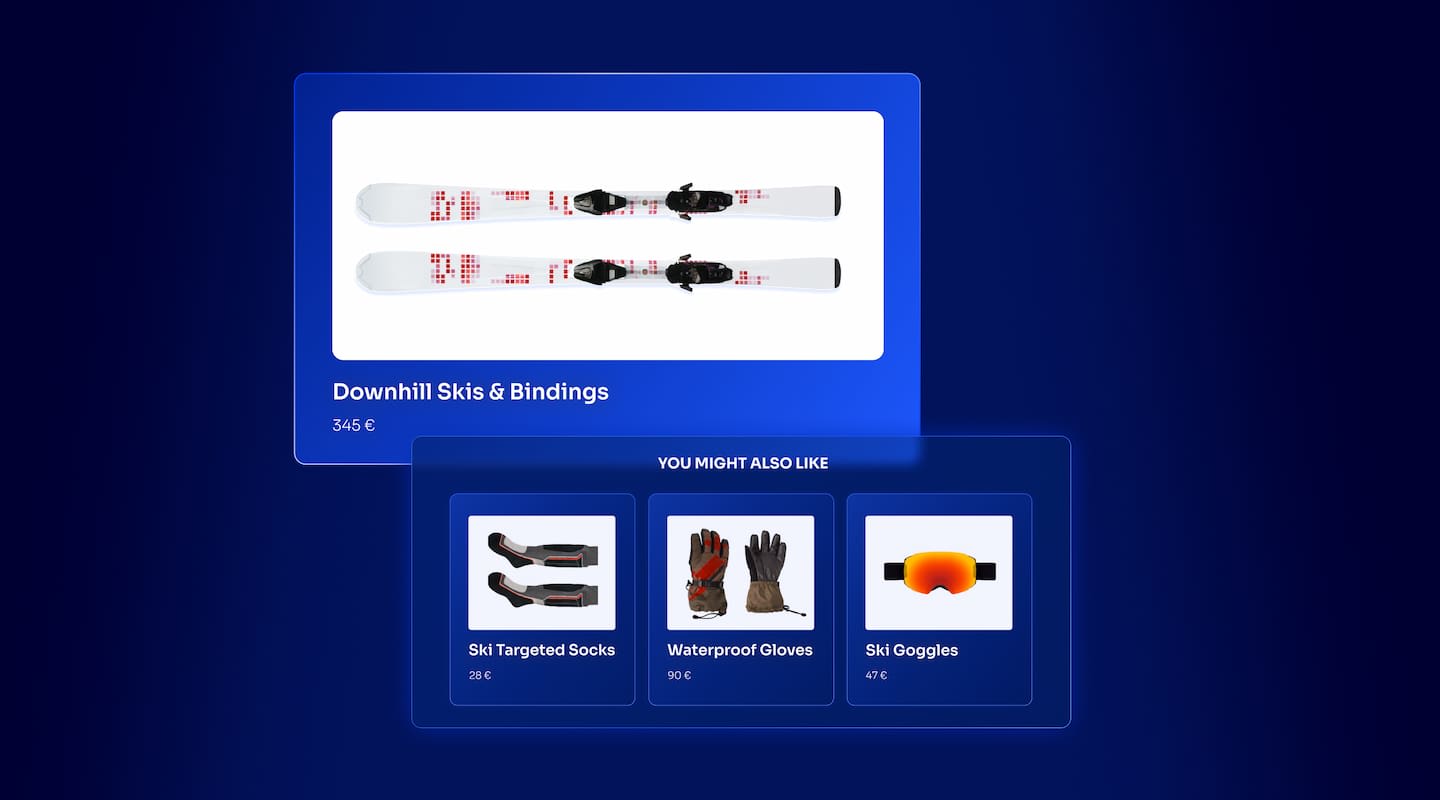

Algolia’s Recommend complements site search and discovery. As customers browse or search your site, dynamic recommendations encourage customers to ...

Frontend Engineer

Winter is coming, along with a bunch of houseguests. You want to replace your battered old sofa — after all, the ...

Search and Discovery writer

Search is a very complex problem Search is a complex problem that is hard to customize to a particular use ...

Co-founder & former CTO at Algolia

2%. That’s the average conversion rate for an online store. Unless you’re performing at Amazon’s promoted products ...

Senior Digital Marketing Manager, SEO

What’s a vector database? And how different is it than a regular-old traditional relational database? If you’re ...

Search and Discovery writer

How do you measure the success of a new feature? How do you test the impact? There are different ways ...

Senior Software Engineer

Algolia's advanced search capabilities pair seamlessly with iOS or Android Apps when using FlutterFlow. App development and search design ...

Sr. Developer Relations Engineer

In the midst of the Black Friday shopping frenzy, Algolia soared to new heights, setting new records and delivering an ...

Chief Executive Officer and Board Member at Algolia

When was your last online shopping trip, and how did it go? For consumers, it’s becoming arguably tougher to ...

Senior Digital Marketing Manager, SEO

Have you put your blood, sweat, and tears into perfecting your online store, only to see your conversion rates stuck ...

Senior Digital Marketing Manager, SEO

“Hello, how can I help you today?” This has to be the most tired, but nevertheless tried-and-true ...

Search and Discovery writer

Technology continues to transform commerce. The most recent example is visual shopping, a fast-growing online functionality that leverages the technologies of image recognition and machine learning to create a powerful visual search-by-image and discovery experience.

Smartphones and online photo sharing, combined with machine learning and image recognition technologies, power visual search. Technically, visual search is called image recognition, or more broadly, computer vision; but more commonly, it’s known as search-by-image and reverse-image search.

Until recently, your choices for building this kind of feature were limited. Both Google and Bing offer a powerful visual image search for merchants. However, with new vector search technology, any company can build image and visual search solutions.

The second part of this article provides an overview of the AI/ML technology behind image recognition, describing the mechanisms that enable a machine to learn what’s inside a photo. We show how a machine breaks down images by their most basic features and then mixes and matches those features to ultimately classify images by similarity. We’ll describe the process of a neural network, or more specifically, the convolutional neural network (CNN), which is so prominent in the image recognition field.

But first, we’d like to be more visual and show you what visual shopping – a perfect example of practical artificial intelligence – looks like.

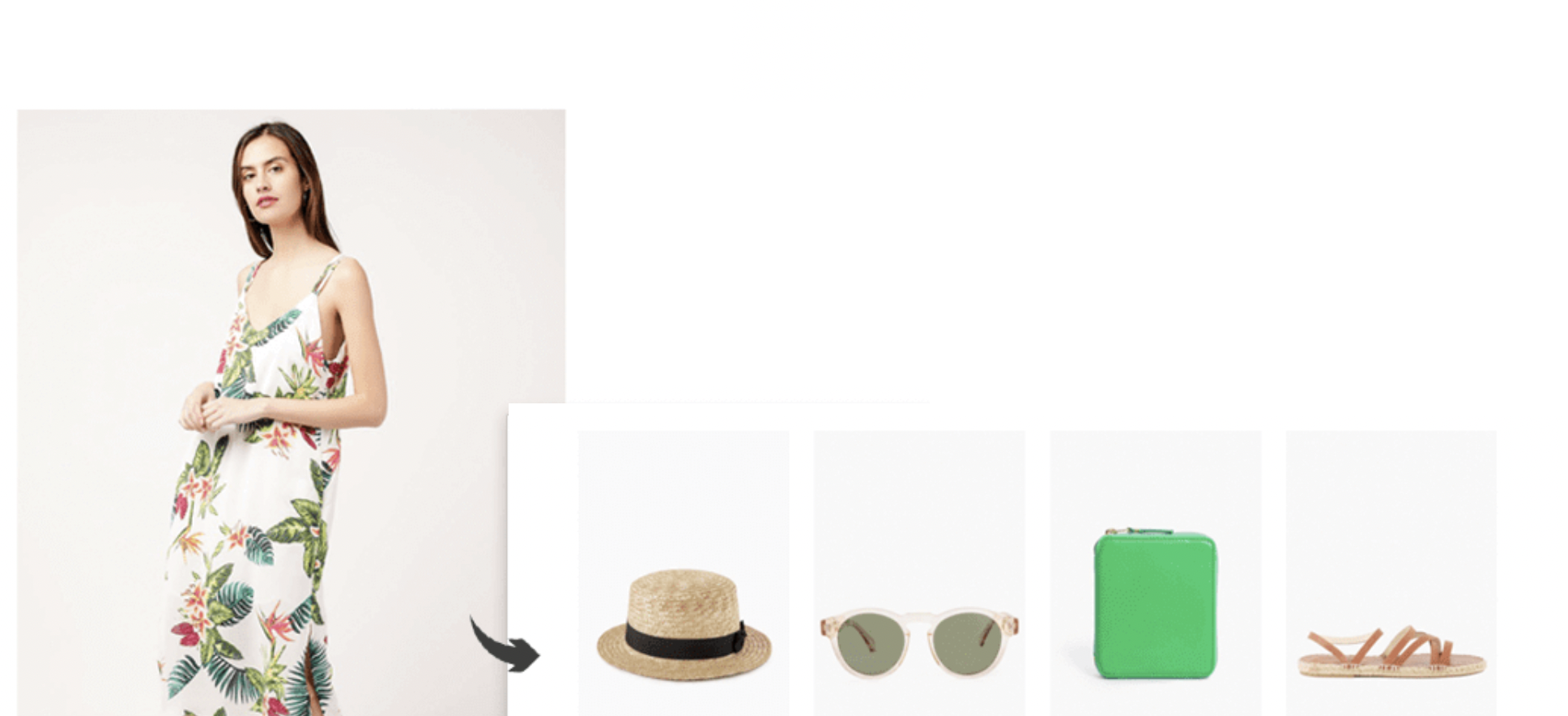

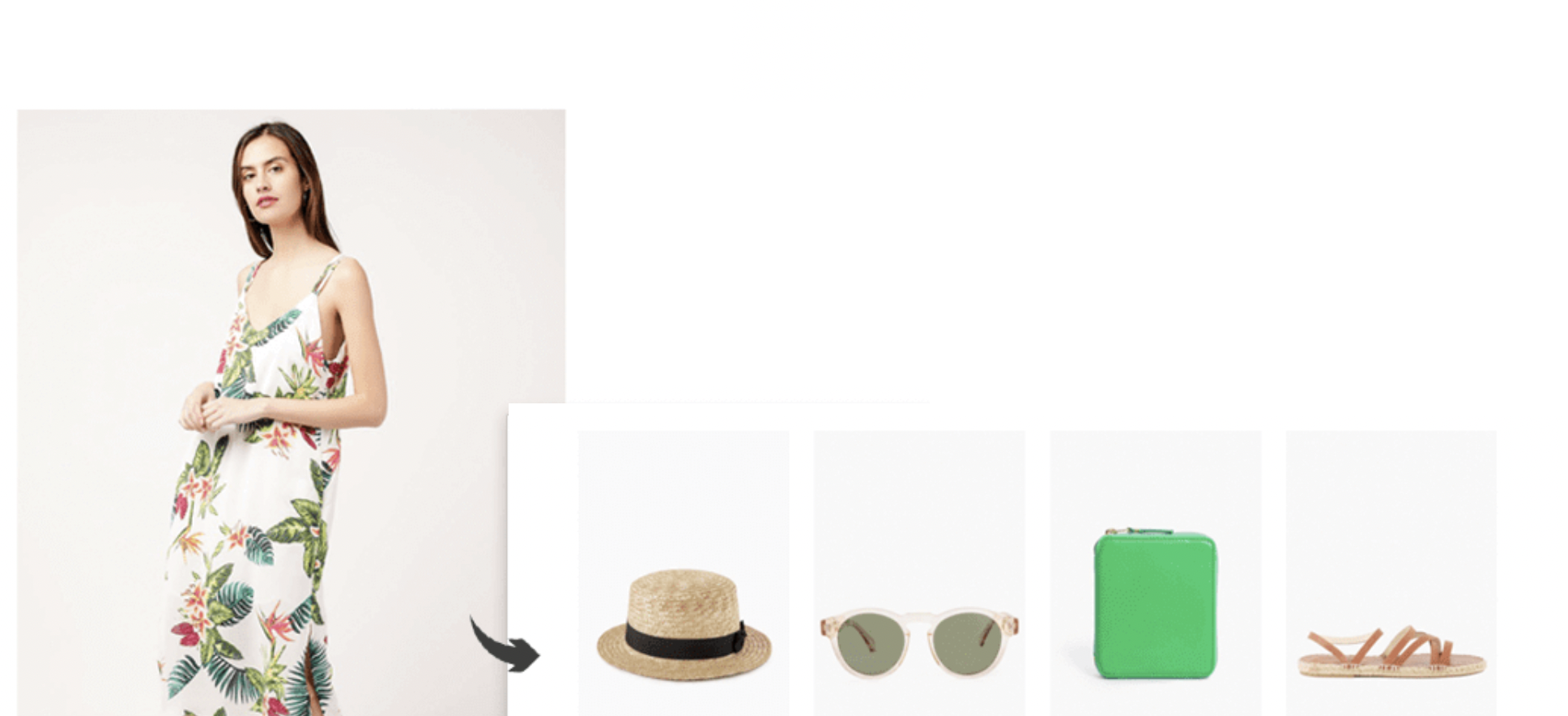

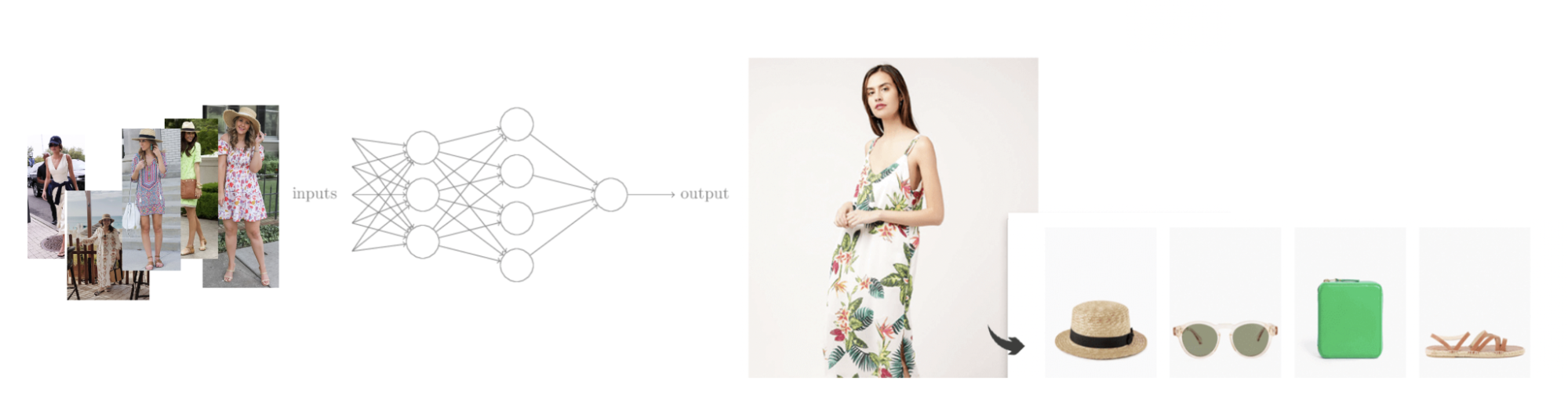

A machine can discover patterns and combinations of products from a vast index of images to produce a Complete the Look feature. Such a feature can detect patterns in social media photos and learn which styles of dress, hat, glasses, bag, and shoes show up together. These products become “a look” based on how frequently they are worn together in the real world.

A large number of shared online images in which women wear a particular combination of clothing can be transformed into a look that your online store can propose.

There are several reasons to consider visual shopping a game changer:

At its most basic, image search is an alternative to textual search. Images help users find and browse products with visual examples instead of words. Given the unique ability of a machine to compare two images, image search can produce surprising similarities and recommendations, and can have many real-world applications – not just in commerce. To name just a few outside of ecommerce: doctors comparing x-rays or photos of injuries, and architects looking up similar bridges or houses.

The smartphone has become a second eye, and machine learning models can leverage the abundance of the world’s smartphone photos to guide online shoppers to find exactly what they want. It’s not overstating the case to say exactly.

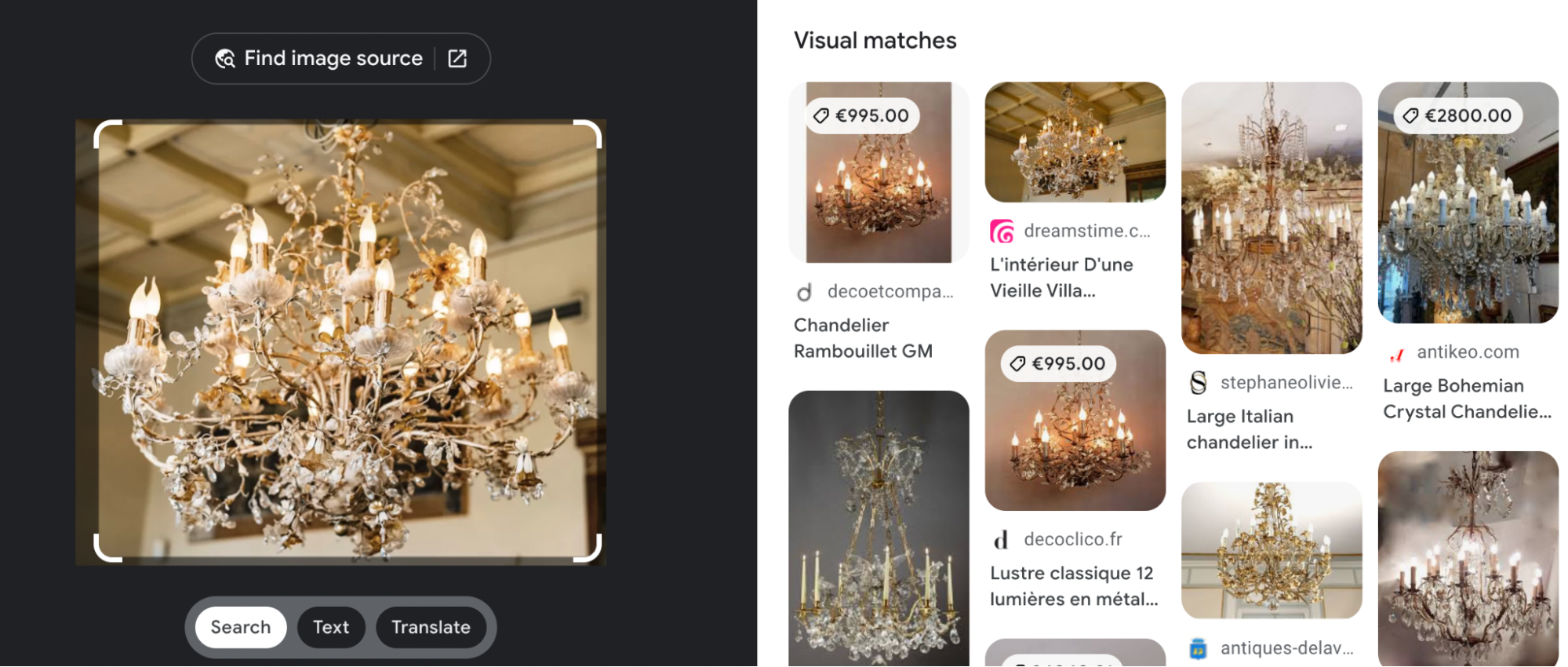

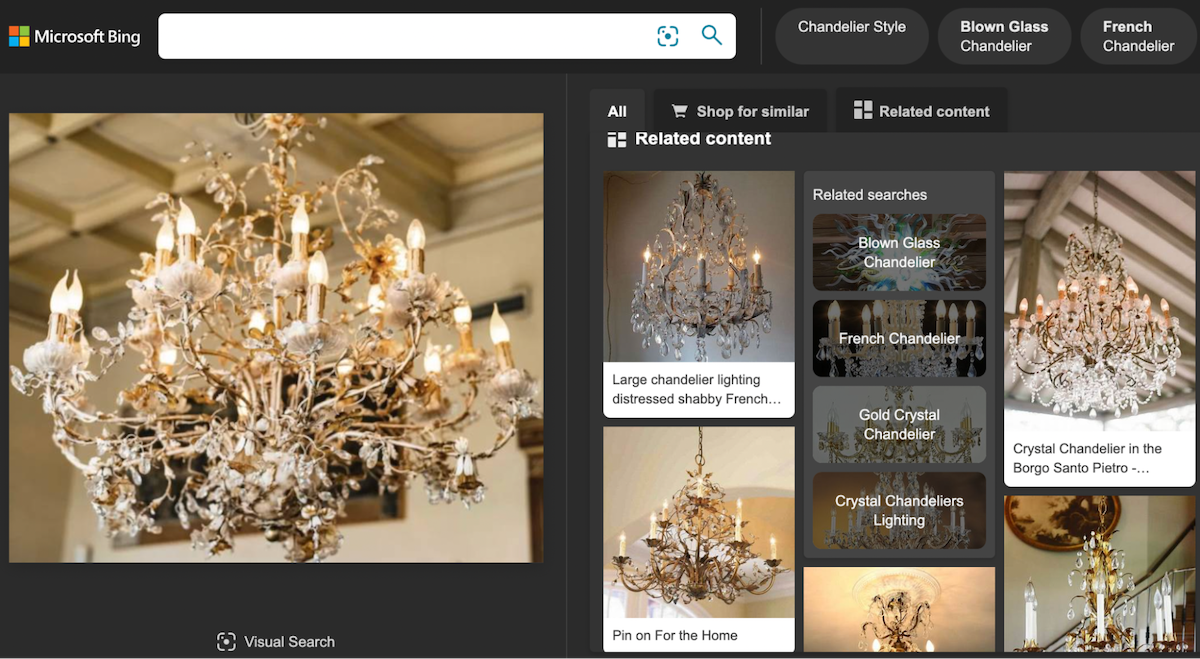

Consider a very old chandelier seen randomly in someone’s home:

Now, the consumer could type out a very detailed description, such as “chandelier gold antique candlecups chains pendalogue”, but this relies on great search skills. They will need to combine common sense words (chandelier, gold, antique) with more technical terms (candlecups, chains, pendalogue). There are several challenges here:

There’s a good chance that such a detailed and perhaps incorrect set of words will get no results – even if the item is available on that website!

Enter images. With image recognition, companies can build applications that match the above photo with all the same photos and descriptions in their search index. The best ML models can achieve high levels of accuracy (92% and 99% in some cases), where every significant detail matters, thus enabling the search engine to detect exact matches:

As you are looking for your chandelier, you may want to find similar styles. Machines don’t only learn to match elements of an image, they can also use those same elements to categorize or cluster images so that they can recommend similar images. Image search expands the possibilities of recommendations.

Bing provides both exact and similar visual shopping. In this image, Bing displays photos that match exactly, but it also gives the user a number of ways to expand their search, with facets (brown glass, French style) and related searches (search for brown, french, crystal, lighting). There’s an important difference between facets and related searches: choosing a facet reduces the current search results to show only those items that match the chosen facet; a related search executes a new search, thereby finding items that your original search might not have retrieved. Image search can bolster the power of these two discovery options.

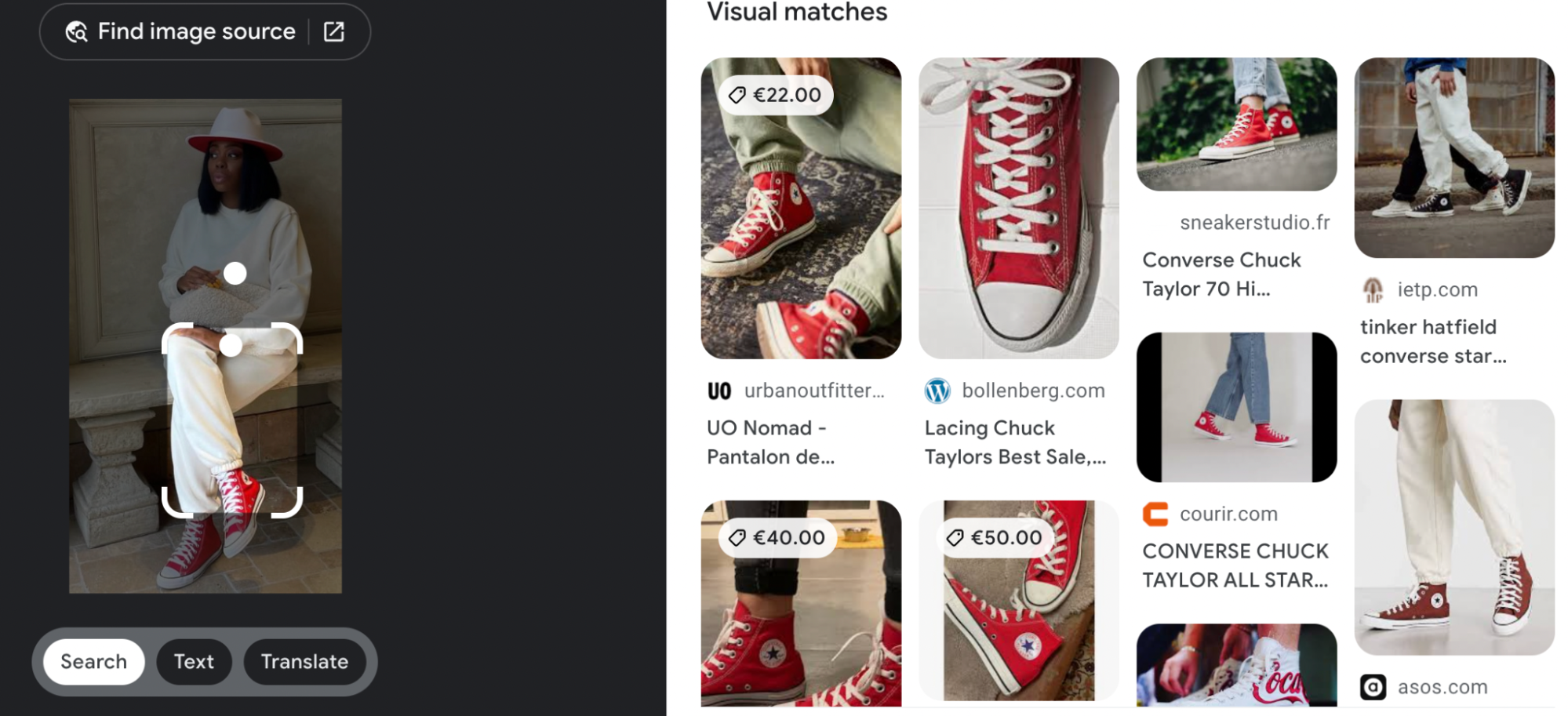

Let’s take the example of fashion. Fashion websites can leverage image recognition in ways that go beyond exact and similarity matching; they can match colors, styles, and categories. Consider a photo of a woman wearing fashionable red sneakers.

Here, Google’s image search found exact matches as well as similar items with different colors and styles.

A technical moment: What’s going on here? In part 2, we’ll get into the details of how machines detect attributes, but the general idea is that people label images to help machines classify images. They need to label not only what something is (a sneaker) but to label its attributes (color, size, shape, context use, and so on). Once a machine learns the attributes of an object, it can feed that information into a recommendations model to produce the above recommendations.

Differentiating objects and their attributes is central to how machines learn. Labelling is one method that does this. But a machine can also differentiate without labeling – by detecting attributes on its own without naming them. For example, a machine can detect a “red” pattern based on pixel values that differentiate red from other colors; and it can start putting “red” objects into a “red” group. It can also group by detecting shapes: shoe pixel patterns differ from other objects, running differs from standing. It can also detect “sneaker” without naming it. And it can then use these pixel patterns to classify all these unnamed objects into groups and recommend similarly shaped or colored items. We’ll discuss this in part 2.
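As a rough illustration of grouping by pixel values without labels, here is a minimal sketch. The function names, the 0-to-255 RGB encoding, and the threshold of 50 are all assumptions made for this example; the group names exist only so that a human can read the result, and a real system would learn such groupings rather than hard-code them.

```python
from collections import defaultdict

# Assumed threshold: a channel "dominates" when it exceeds both others by at least 50.
DOMINANCE_MARGIN = 50


def dominant_channel(pixel):
    """Return 'red', 'green', or 'blue' for an (r, g, b) pixel, or 'neutral'
    when no single channel clearly dominates."""
    r, g, b = pixel
    channels = {"red": r, "green": g, "blue": b}
    name, value = max(channels.items(), key=lambda item: item[1])
    others = [v for k, v in channels.items() if k != name]
    if all(value - v >= DOMINANCE_MARGIN for v in others):
        return name
    return "neutral"


def group_pixels(pixels):
    """Put each pixel into the group of its dominant channel."""
    groups = defaultdict(list)
    for pixel in pixels:
        groups[dominant_channel(pixel)].append(pixel)
    return dict(groups)


pixels = [(220, 30, 40), (200, 10, 10), (20, 40, 210), (128, 128, 128)]
groups = group_pixels(pixels)
```

The two reddish pixels land in the same group purely because of their numeric values, which is the idea behind detecting a “red” pattern without being told what red is.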

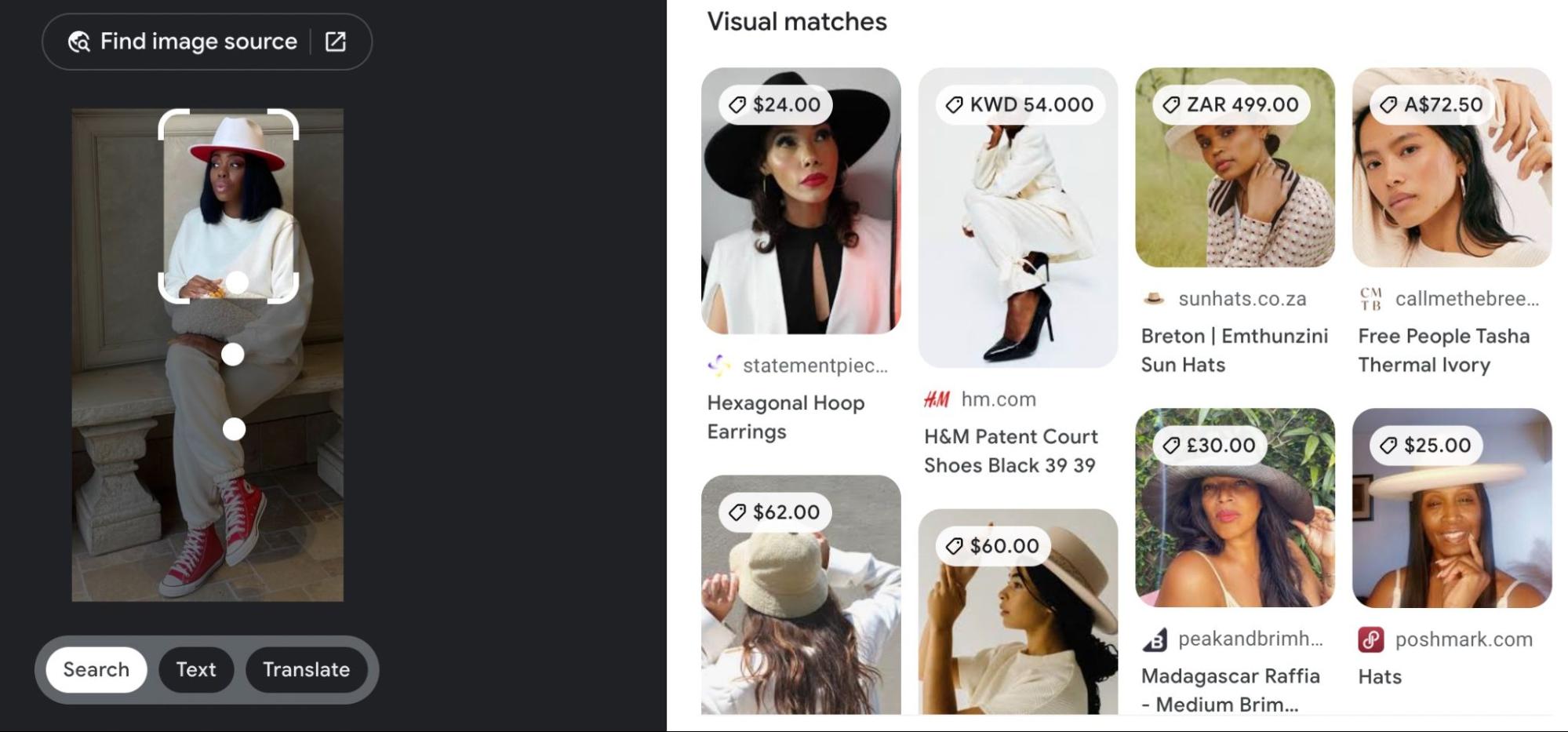

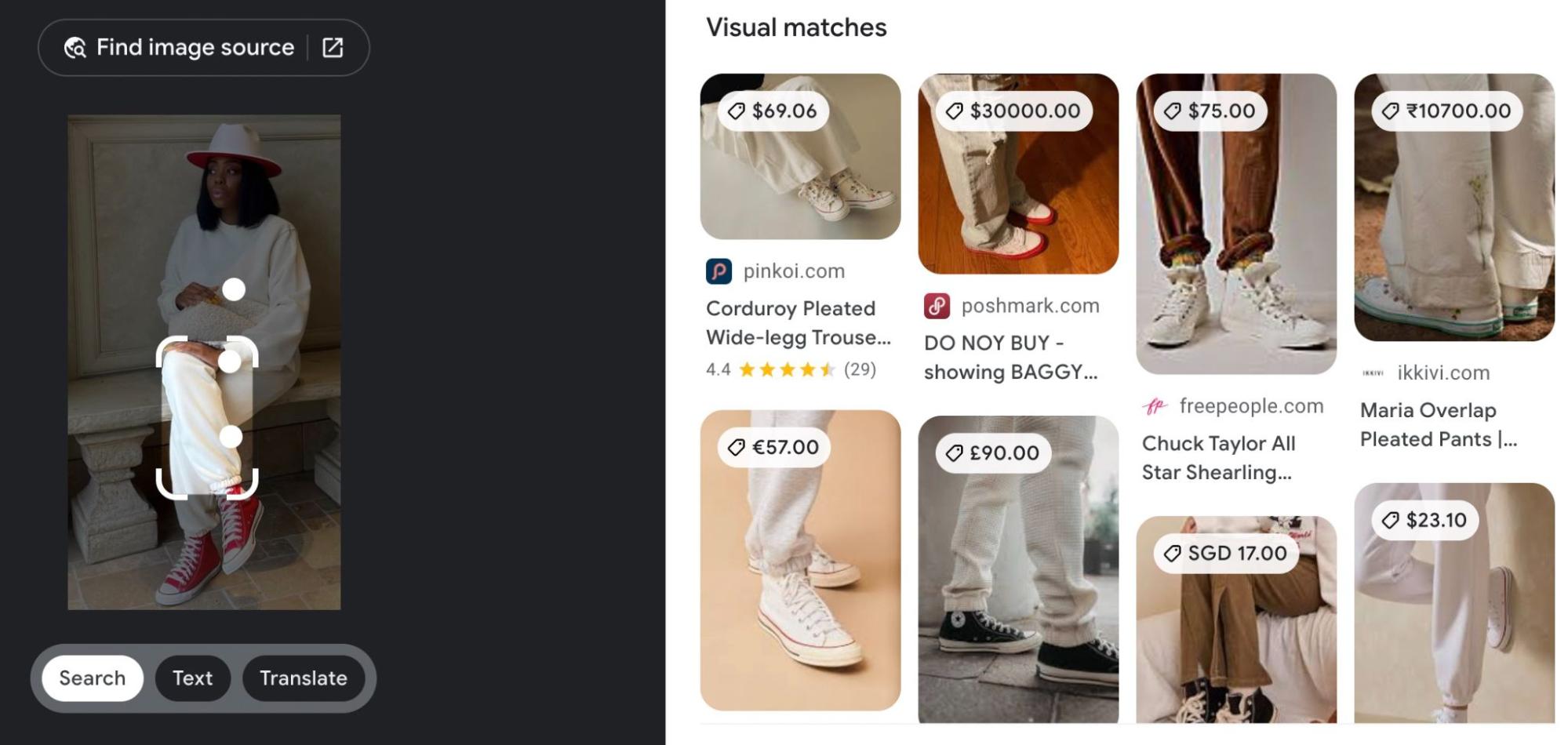

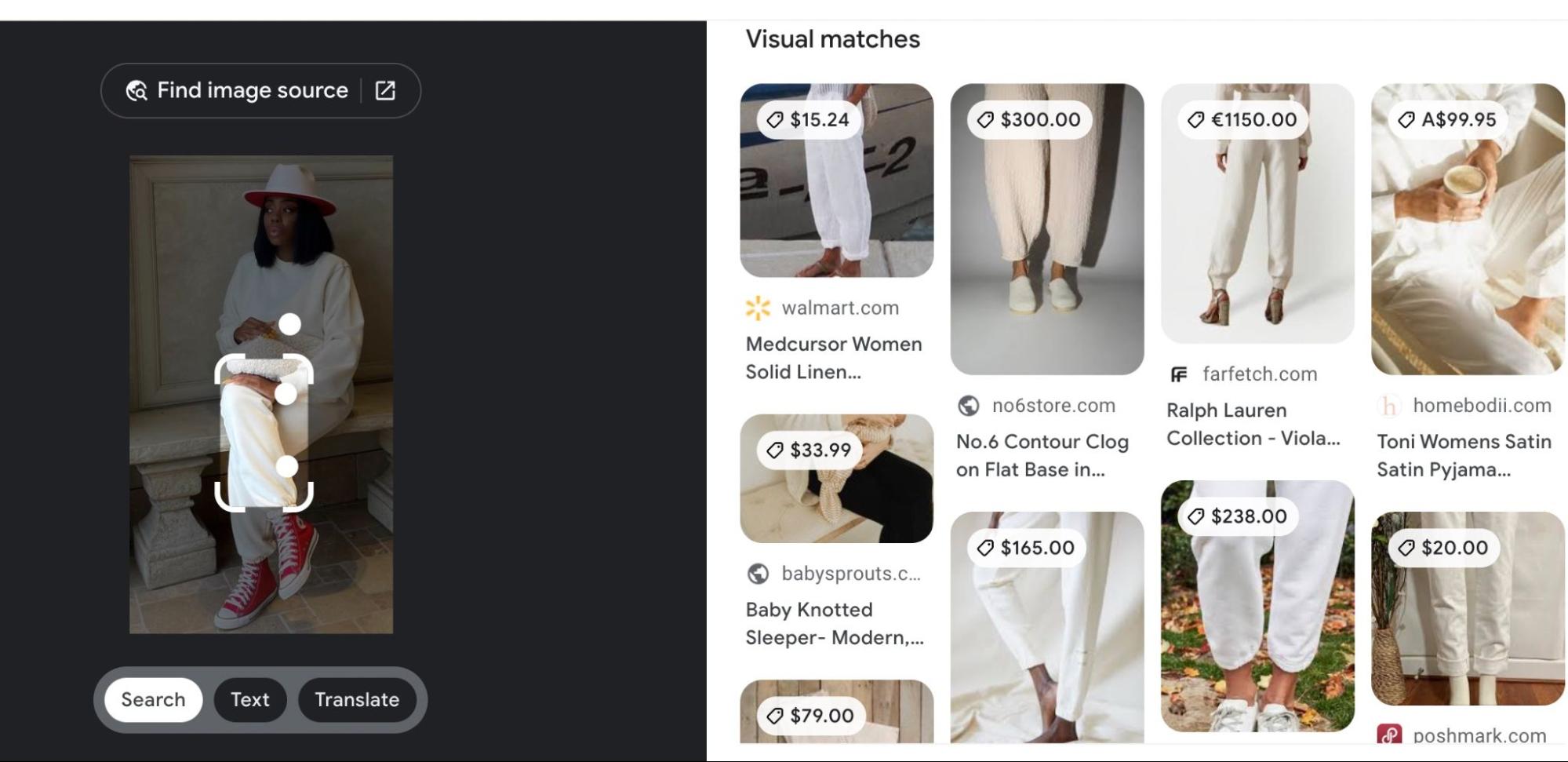

The next example also shows how a cropping feature can make the search more powerful. By moving the crop, you can switch the search.

By moving the crop, you can start getting offers about combination joggers and sneakers.

Moving the crop one inch higher, you get joggers with a variety of footwear, not the same as above.

As this example illustrates, shopping visually can be a visual discovery process, where a user can move around an image to help them immediately discover the full online catalog.

We saw this image above, which illustrates the idea of completing a look.

The underlying mechanism relies on techniques already discussed, image classification and attribute extraction, and adds another: detecting multiple objects in images and finding patterns of “frequently worn together”. In other words, if these items frequently appear together in your index of photos, then the machine learns that they constitute a “look”.
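The “frequently worn together” idea can be sketched as simple co-occurrence counting. This assumes that object detection has already produced a list of item names per photo; the sample data, the function name, and the `min_count` threshold are hypothetical.

```python
from collections import Counter
from itertools import combinations


def frequent_pairs(photos, min_count=2):
    """Count how often each pair of items appears in the same photo and
    keep the pairs seen at least `min_count` times."""
    counts = Counter()
    for items in photos:
        # sorted(set(...)) makes each pair order-independent and counted once per photo
        for pair in combinations(sorted(set(items)), 2):
            counts[pair] += 1
    return {pair: n for pair, n in counts.items() if n >= min_count}


# Hypothetical detections: one list of items per photo
photos = [
    ["dress", "hat", "sunglasses"],
    ["dress", "hat", "bag"],
    ["jeans", "sneakers"],
    ["dress", "hat", "sneakers"],
]
looks = frequent_pairs(photos)
```

Here the dress and hat appear together in three photos, so that pair is the only one that passes the threshold. A production system would work with learned features and far larger counts, but the principle of frequency-based pairing is the same.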

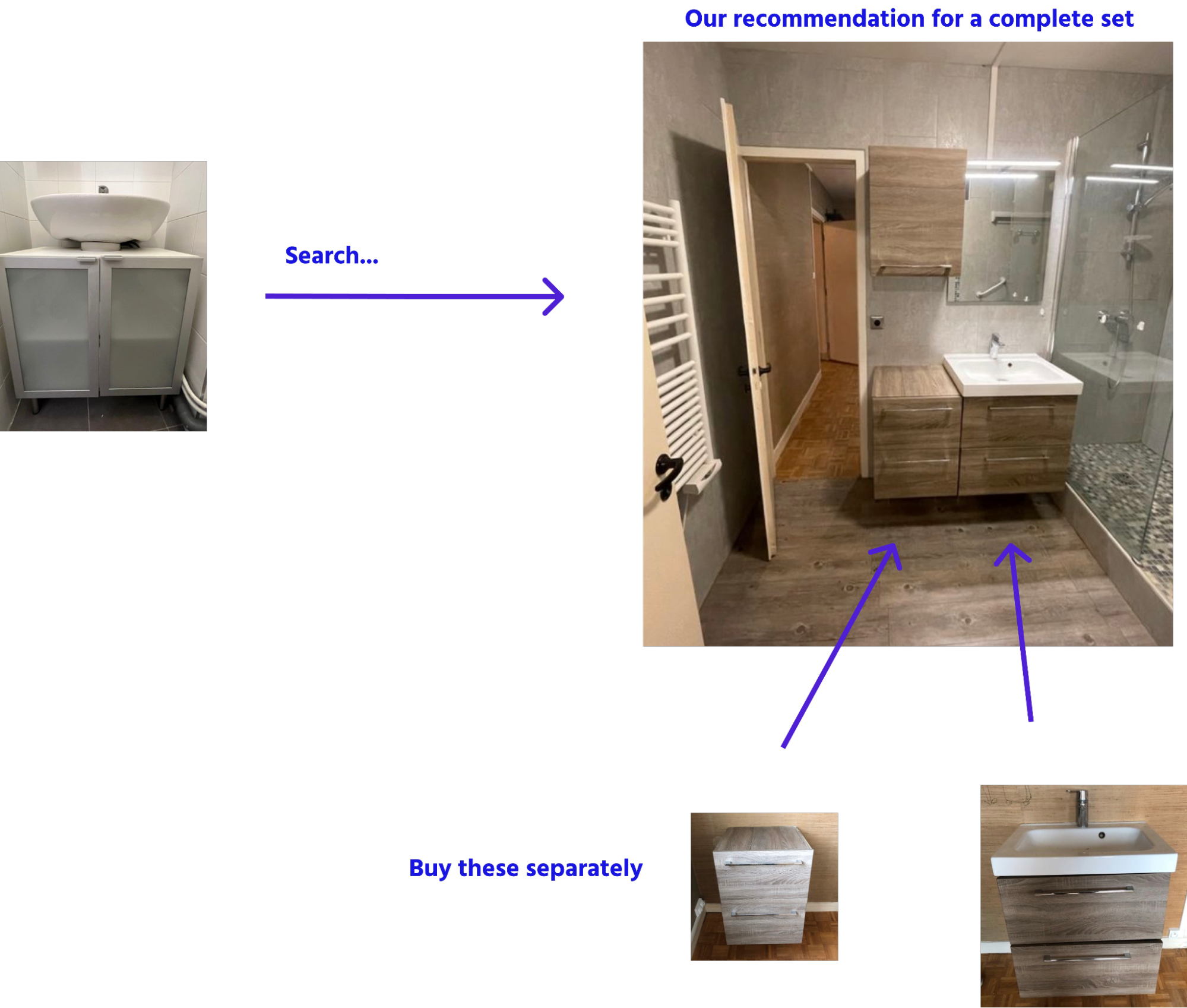

An online home design store can benefit from the same functionality. It can use an image to propose a complete home decoration as well as recommend individual products within the decoration.

You can also go one step further and recommend products that make sense given the context of the image. An image in a nightclub will produce different recommendations than an image in a forest. In this scenario, the background provides another set of clues or attributes for the system to build its recommendations.

A man goes out dancing and admires the style of a friend’s choice of clothes. He’d like something similar, so he takes a photo and uploads it to a fashion website. The results contain a number of different outfits, all resembling the man’s coat in the image. But the fashion website can also take into account the context of the photo, such as a party or a nightclub. The website can then recommend evening wear. This can be called Context Shopping. The context is the background. If the man were dancing or sitting, maybe the suggestions would change.

Image searching has in no way replaced the search bar. The essence of keyword search – word matching – is still essential to search and discovery. Most searches require only one or a few keywords to find what you’re looking for.

For starters, not everyone owns a smartphone or takes photos. Additionally, the search bar will always be the most intuitive tool for people who prefer words over images and feel that thinking and typing are the best methods for searching and browsing (though voice search can potentially replace typing). Moreover, advances in machine learning and language processing have made keyword search and neural (ML-based) search a powerful hybrid search and discovery experience.

In the end, the two approaches – image and keyword search – make for a powerful combination.

In machine learning, we use the word “feed” for “input” – we feed images into machines to teach them how to see. Well, humans have also fed on images to understand the world. From pre-history to now, humanity’s understanding of its environment began by recognizing only simple shapes, and advanced to more complex object representations. A child follows the same progression. And as you’ll see below, machines also start with the simplest shapes – geometric patterns.

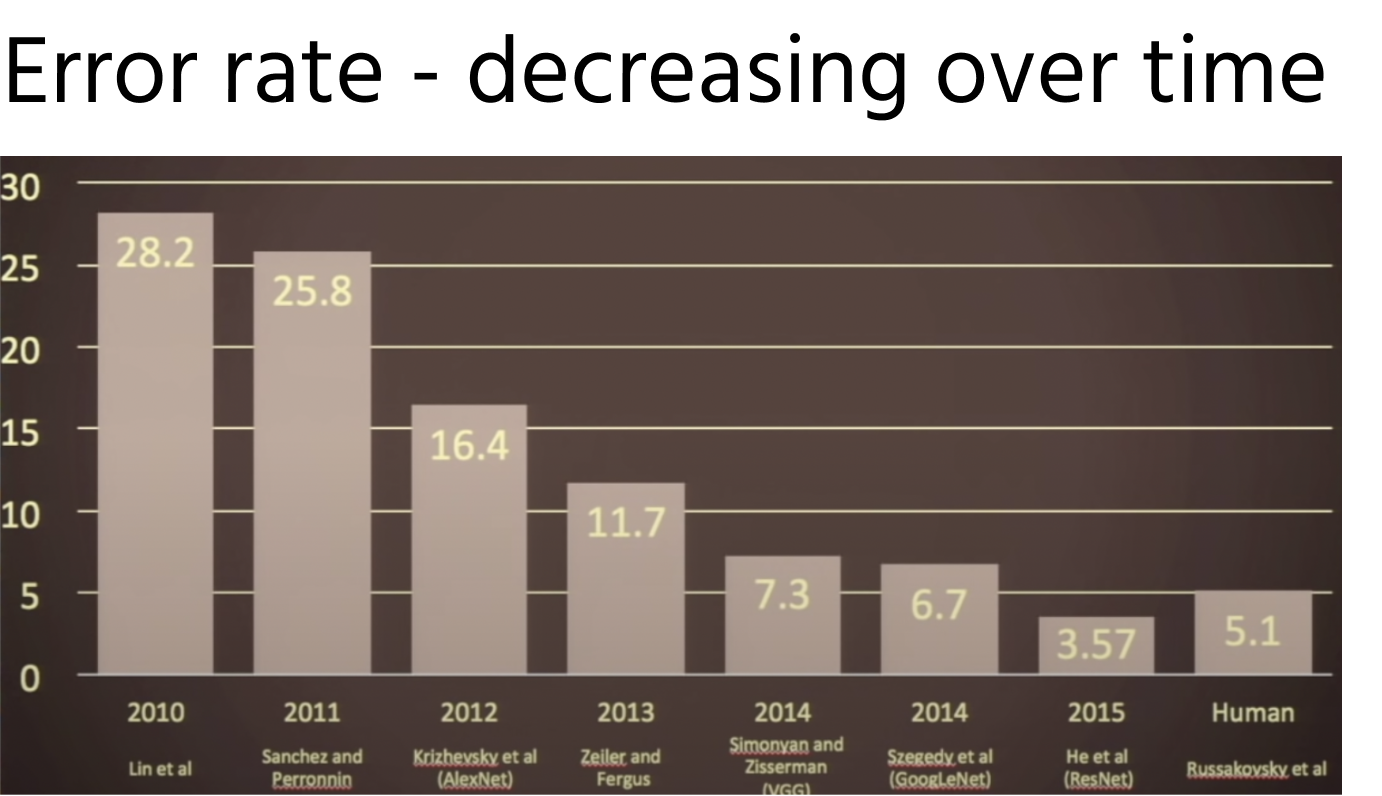

The technology of machine learning has also evolved from recognizing simple geometric shapes to more detail. Today, machines can understand real-world detail almost as well as humans.

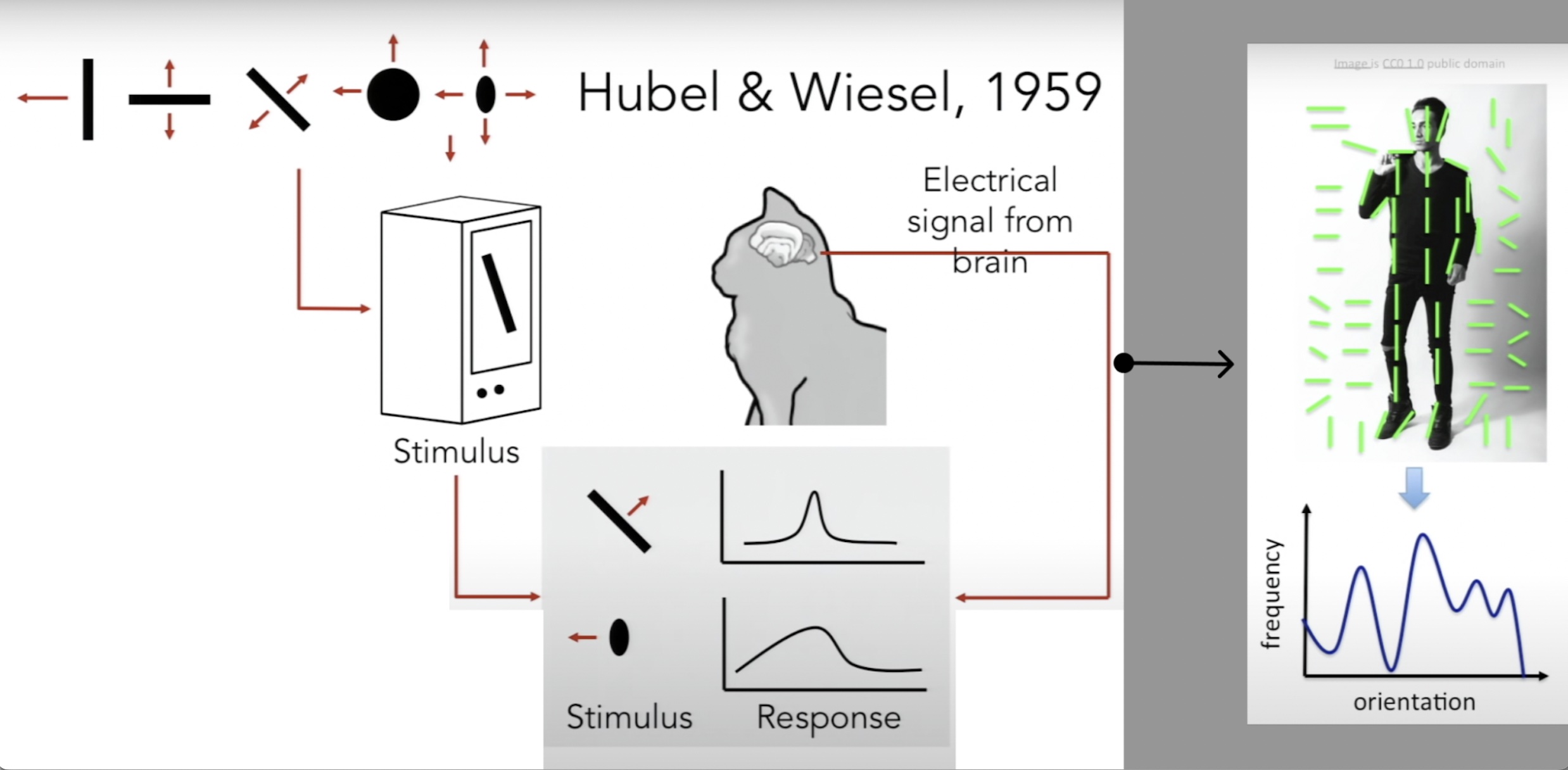

To explain how machines recognize images, we’ll go back only as far as the 1950s, when neurobiologists theorized how brains see images. Brains start by simplifying and abstracting, seeing only variations of lines, such as their sizes, directions, and whether they are straight or curved.

Engineers, inspired by this starting point, implemented the same abstraction process. Line or edge recognition has become the foundation of computer vision:

As you can see, geometry plays a central role. It goes from simple features, like lines, curves, cubes, and spheres, to hundreds or thousands of features that represent depth, texture, material, and eventually everything that can be detected in the real world. It’s no surprise, then, that the math of geometry (for example, cosines and matrices) plays a key role in machine learning.
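To make the role of cosines concrete, here is a minimal sketch of cosine similarity, a common way to compare two feature vectors. The three-number vectors below are invented for illustration; real image vectors have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors.
    Values near 1 mean the vectors point in nearly the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)


# Hypothetical feature vectors
chandelier_a = [0.9, 0.1, 0.8]
chandelier_b = [0.8, 0.2, 0.9]
sneaker = [0.1, 0.9, 0.2]

similar = cosine_similarity(chandelier_a, chandelier_b)
different = cosine_similarity(chandelier_a, sneaker)
```

The two chandelier vectors score much closer to 1 than the chandelier and sneaker pair, which is how a vector-based search can rank visually similar items first.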

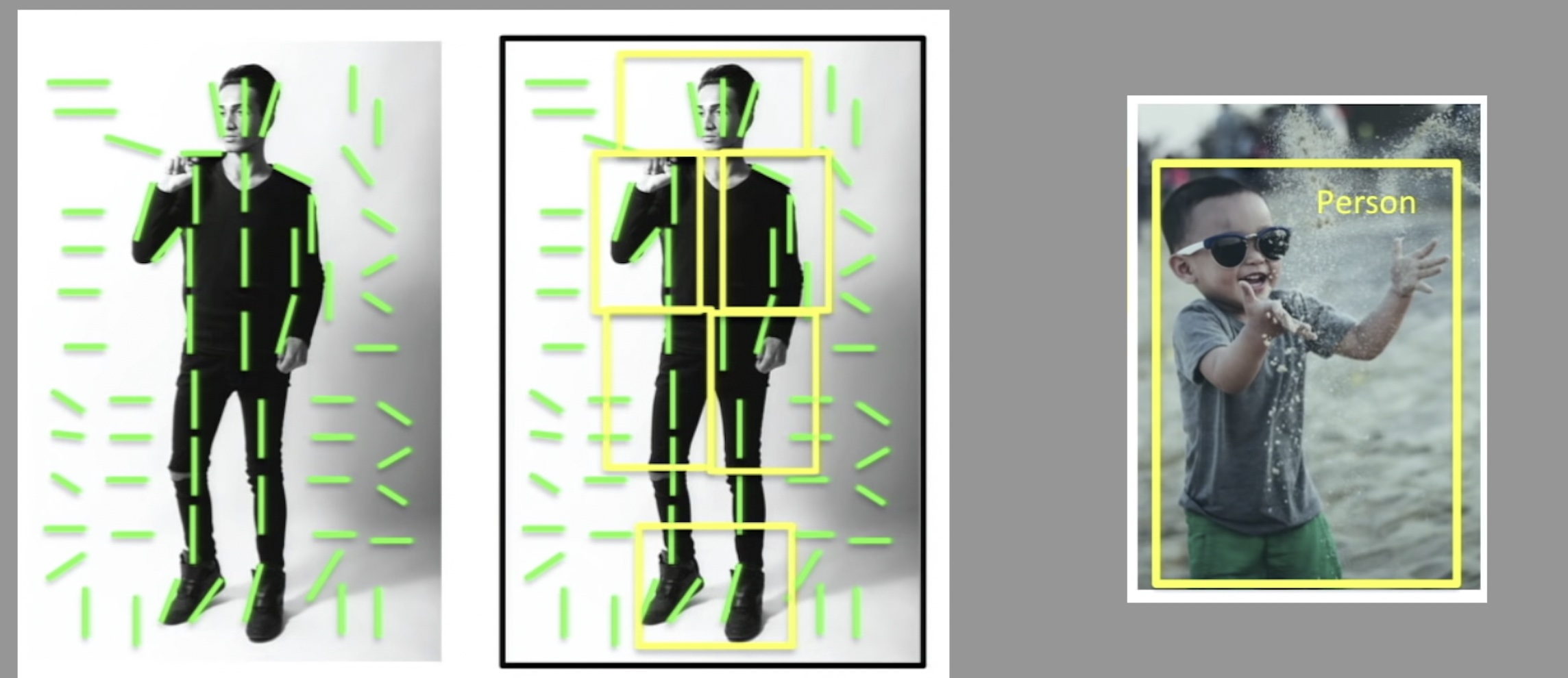

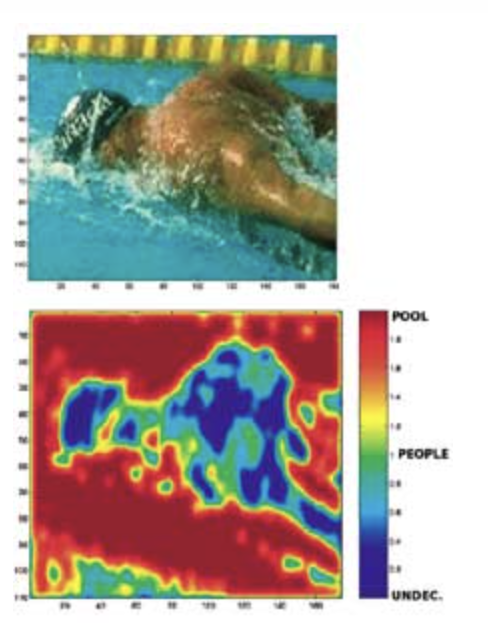

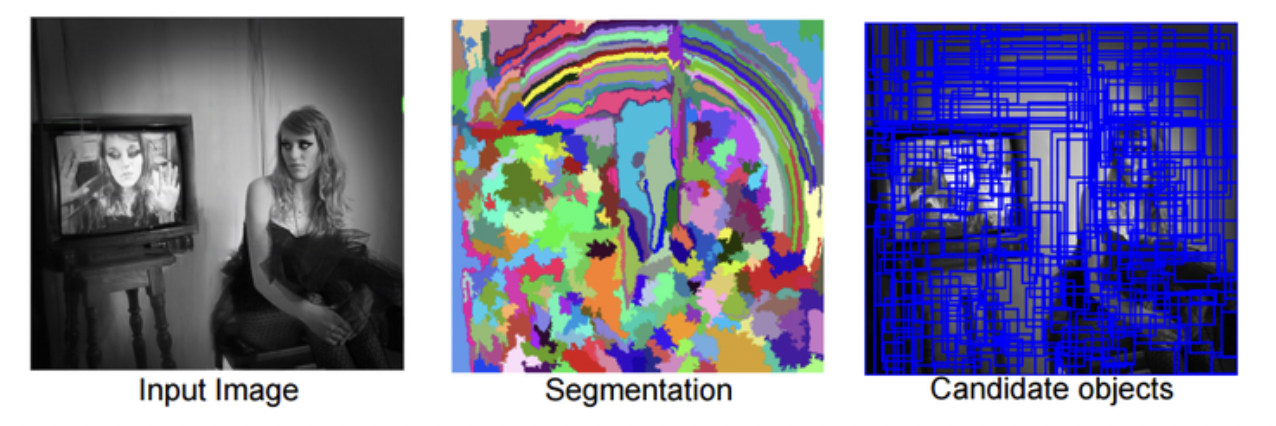

While every advance in machine learning techniques has been critical, the widespread use of image recognition comes from its ability to classify and name objects. Recognizing patterns as well as similarities and differences between images enables grouping (clustering) objects. But this is not enough. The objects need meaning: a car needs to distinguish a person from a red light. Thus, the explicit naming and labelled semantic categorization of images is central to image segmentation (classification), which is central to image understanding and image matching, the latter of which is central to our main subject, visual search.

To summarize, the history of machine learning has taken computer vision from the rudimentary to the realistic. Machines may already see better than humans:

But because machines don’t actually understand objects in the same way people do, machine errors are not the same as human error … or are they? Can you spot the cute puppy? How long did it take you?

The importance of data cannot be overstated in machine learning. In the case of image recognition, the massive growth in the number of images (billions every day) has enabled machines to reach the maturity evidenced in the use cases in part 1 of this article, for two reasons: quality and variety.

The high-definition quality of the images, together with smartphone technology that allows anyone to take good-quality pictures, has dramatically changed the technology of optical recognition.

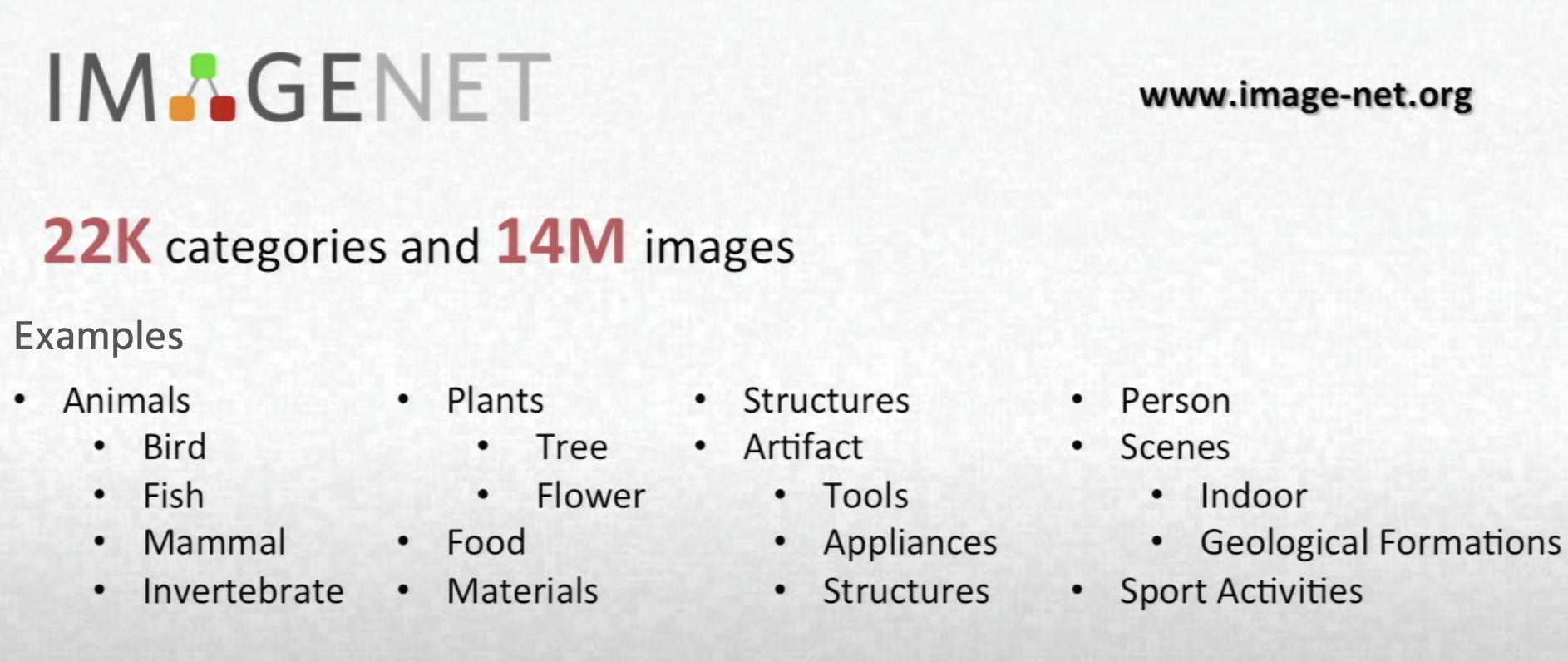

Thanks to this abundance of quality imagery, machine learning engineers have been able to create benchmark image datasets, like ImageNet, which contains over 14 million images of great variety, all categorized and named:

Why is this freely available dataset so important? Because engineers from all over the world can test their models using this same index of images as a benchmark. The images have been chosen with care. They are of high quality and contain a degree of variety that is essential to machine learning. Quality images facilitate more efficient and accurate learning. And, as you’ll see in the next section, variety ensures the breadth of learning: it enables machines to understand the variations of detail and content that exist in the real world.

To appreciate the importance of variety, it’s necessary to get into the subject of overfitting and underfitting.

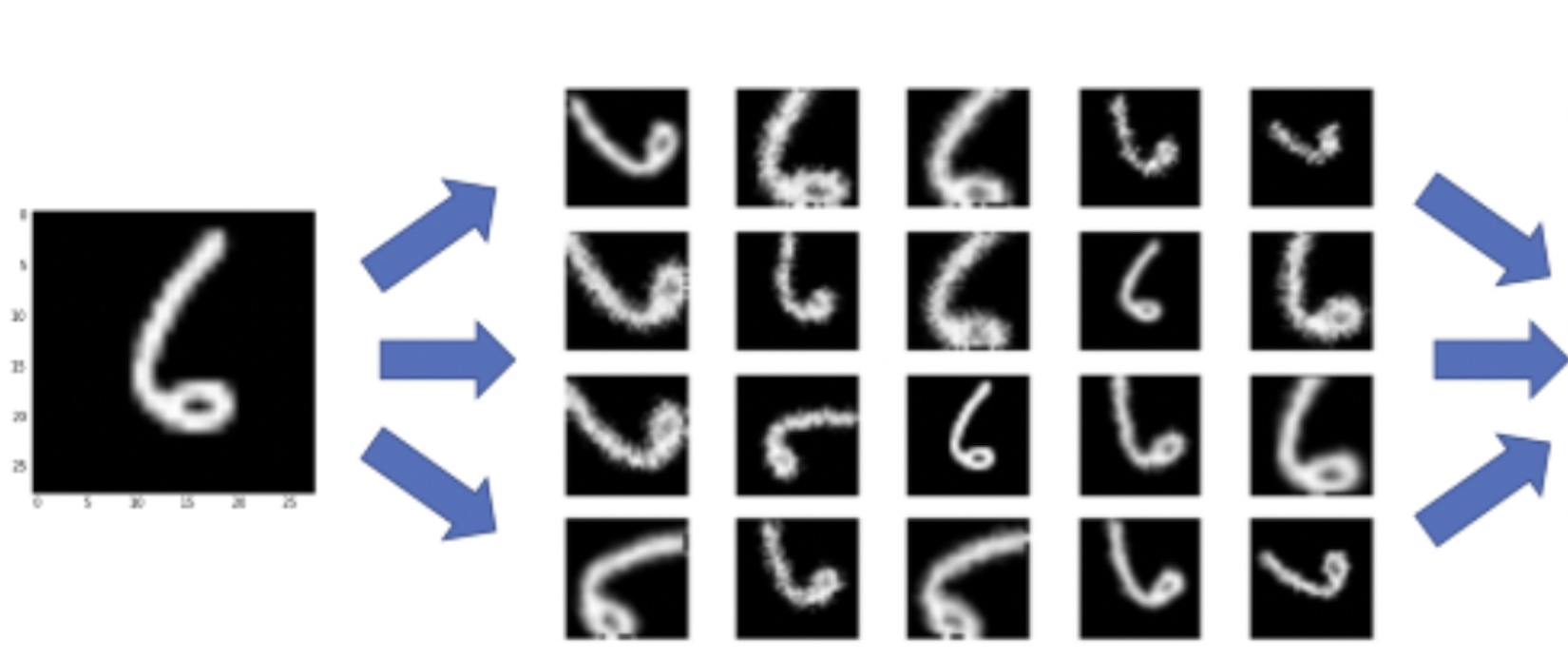

You need to feed a machine a good variety of handwritten 6s before it can learn to detect all handwritten 6s.

A machine learns by the features we feed it. But we need to feed it features that truly make a difference. If you feed it a coat, you need to feed it the same coat in several different ways: buttoned and unbuttoned, ironed and crumpled. Getting this variety of features right is what we mean by fitting.

Underfitting

Machines need enough information to make useful distinctions. If we give a machine a mere silhouette of a tiger, cat, horse, and dog, it will place them all in a single class of animals. Outlines do not contain enough information to distinguish between animals (dog = cat) or species (blue jays = parrots). Outlines contain only enough information to distinguish two- and four-legged animals from birds and fish.

Proper fitting

The more details (features) we give a computer, the more it will be able to detect similarities and differences. Adding features that elicit ideas of weight, strength, shapes of noses, eyes, and many kinds of skin and furs – such detail can help distinguish cats from dogs, goats from sheep, and blue jays from parrots. It’s also good to add additional features, such as poses, perspectives, and expressions, to give meaning to sitting vs standing dogs, or sad vs angry dogs.

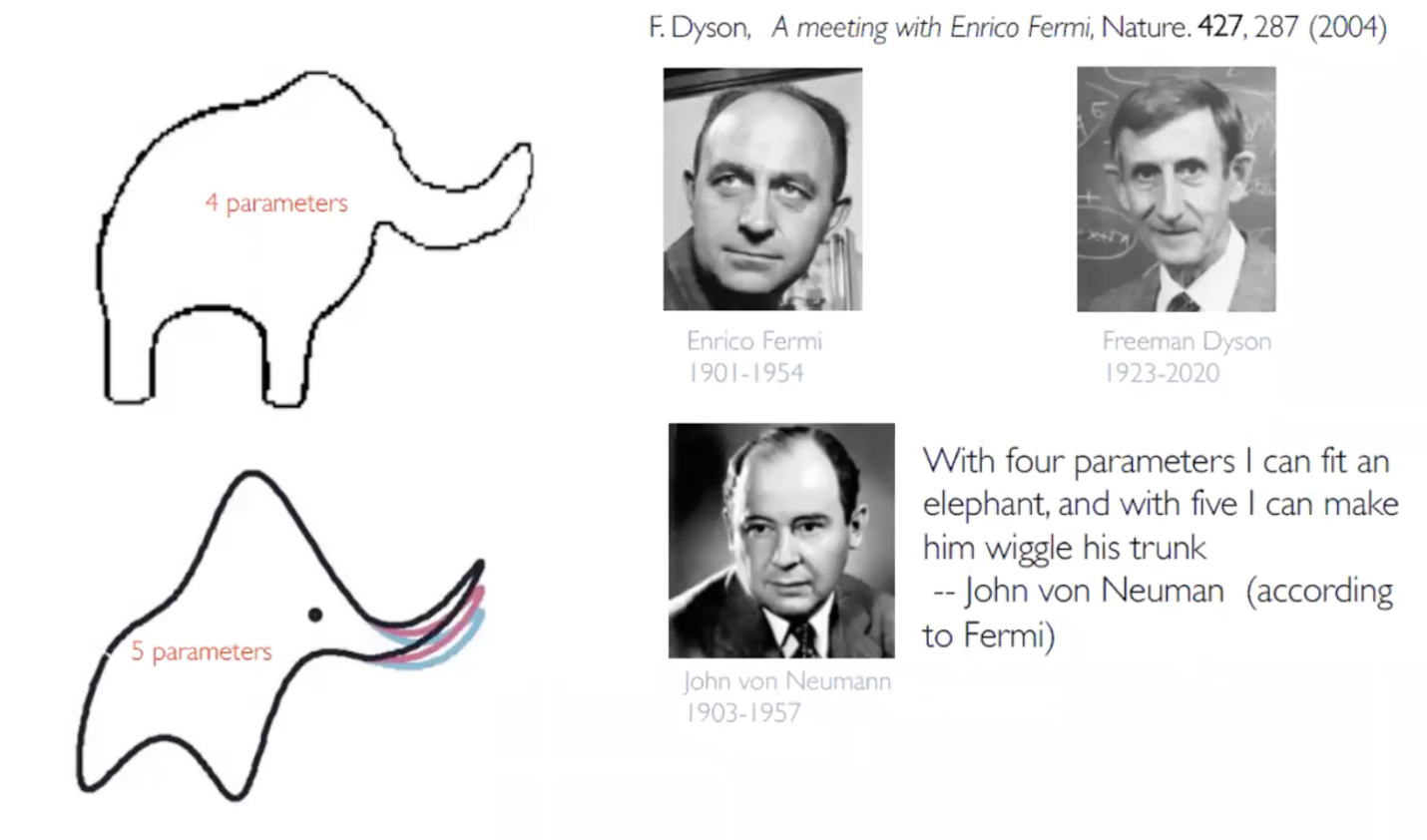

Overfitting – Making everything significant

However, there is such a thing as giving too many details. If we feed millions of photos into a computer and ask the computer to consider every detail as important, this can distort our classification or simply over-characterize our animals. Every blue jay will be in a class by itself. We will lose every distinction necessary for the computer to actually learn useful information.

You can resolve overfitting by considering some details as noise. Here are two common examples of noise:

This last-mentioned example, that of a running vs a non-moving dog, brings us to this conclusion: The end goal of the learning process determines the right amount of information and detail you need to achieve that goal. Image recognition relies on a balance between too little and too much information: if movement is important to your application, then your image index must contain all kinds of movements – not only walking and running, but perspectives of runners, going uphill and downhill, different gaits, and so on. It’s not a small task, as you can see, to pick and choose the right images.

So, you want to tailor your model with images that correlate with your applications’ goals. For this blog, the context is visual shopping. Commercial goals will obviously differ from scientific goals: the feature distinction between fluffy and thin-haired dogs might be all that’s needed for a business selling dog coats; but scientists that study dogs will obviously need far more detail to study every species of dog.

Thus, fashion stores will focus on clothes; home furnishings stores on everything related to designing the interior of your home. But in all cases, the principle of the right fit – not under or overfitting – applies.

We’ve come (finally) to the AI technology that makes this all possible.

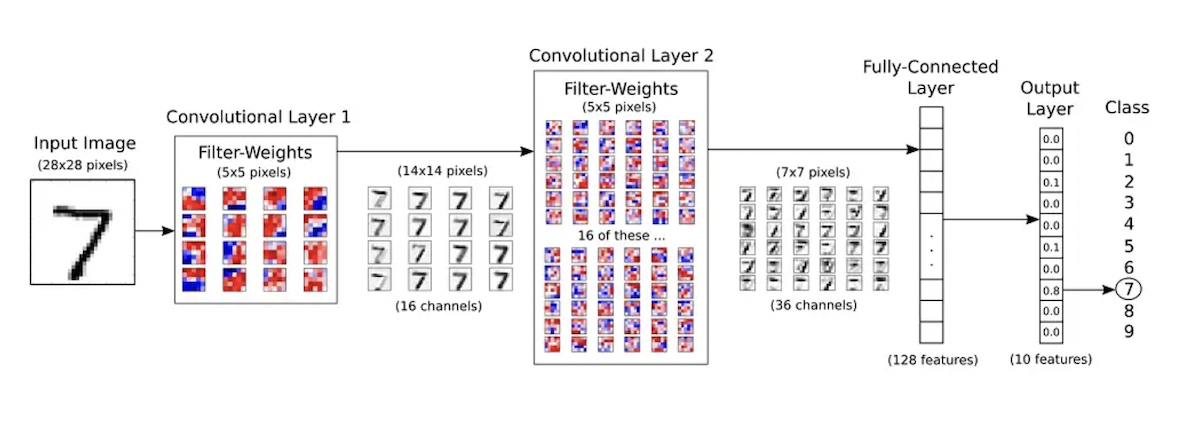

Machines use numbers, statistics, and a deep neural network to see. What this means is that an image’s pixels are converted into numbers, called a vector, which is then fed into a complex software process called a neural network, or more precisely, a convolutional neural network (CNN).

A CNN uses a series of statistical functions called a model that continuously calculates and recalculates the pixelated vector of numbers until the image is accurately recognized and classified by the machine.

The learning part is that as thousands or millions of images are being fed into the network, the model itself is adapting to the images, calibrating itself, and undergoing mini transformations of its own numbers, called weights. The finished model has set all its weights to a near perfect balance that can accurately recognize (nearly all) future unseen images.
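The way a model calibrates its weights can be sketched with the smallest possible case: one weight, adjusted a little after every example. This is plain gradient descent on a squared error, shown as an illustration under stated assumptions rather than as what a real CNN does internally; the data, the learning rate, and the function name are invented.

```python
def train_weight(samples, learning_rate=0.1, epochs=50):
    """Fit y ≈ w * x by nudging w after each sample
    (gradient descent on the squared error)."""
    w = 0.0  # start with an uninformed weight
    for _ in range(epochs):
        for x, y in samples:
            error = w * x - y
            # Gradient of 0.5 * error**2 with respect to w is error * x
            w -= learning_rate * error * x
    return w


# Hypothetical training data that follows y = 2 * x
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
w = train_weight(samples)
```

Each pass moves the weight closer to the value that explains the data. A real network repeats the same kind of adjustment across millions of weights at once.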

Let’s dive in deeper.

As already mentioned, the machine transforms pixels into a vector, which is a list of numbers. The vector represents a certain range of pixels – a grey scale for black and white, or a three-dimensional RGB scale for color – where each number (pixel) in the vector falls within the grey or color scale. For example, the grey-scale model may vary the numbers from 0 to 1 (from no intensity to high intensity). The model can also use a 0-to-1 range to capture the full spectrum of colors.
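Here is a minimal sketch of that conversion. It assumes the common 8-bit encoding in which each pixel value ranges from 0 to 255, and the function names are made up for this example.

```python
def grayscale_to_vector(image):
    """Flatten a 2D grid of 0-255 grey values into a list of floats in [0, 1]."""
    return [value / 255 for row in image for value in row]


def rgb_to_vector(image):
    """Flatten a 2D grid of (r, g, b) tuples into one list of floats in [0, 1],
    keeping the three channel values of each pixel next to each other."""
    return [channel / 255 for row in image for pixel in row for channel in pixel]


# A tiny 2x2 grey image and a 1x2 color image (assumed 8-bit values)
gray = [[0, 255], [51, 102]]
gray_vector = grayscale_to_vector(gray)

rgb = [[(255, 0, 0), (0, 0, 255)]]
rgb_vector = rgb_to_vector(rgb)
```

A grey image yields one number per pixel, and a color image yields three, which is why color vectors are three times as long for the same image size.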

Once the vector is set up, the learning process begins.

The general idea is to input the vector into the model and transform its numbers as it passes through the network. The network is made up of layers, where each layer interprets the vector numbers as representing a feature. Each layer adds features that add more detail to the image. The number of features is called the dimensionality. Here’s an image that gives an idea of how adding features (or parameters) helps the machine learn what the image contains.

Let’s say that layer 1 looks at lines or edges (straight or curved). Layer 2 will then put two or more lines together and look for angles. Layer 3 will form squares and circles. And so on – layers 4 to n add more and more features (texture, posture, perspective, color) until the network has recognized enough features to output a recreated image that can be classified correctly.

Of course, a machine sees lines, squares, texture, and objects like noses, faces, and a tv set differently than us:

Our model in this blog relies on supervised learning, which uses labelled images to help classify images and to test the success of the modeling process. To be clear, as a machine processes each image, it compares the output image to a bunch of labels, including the label of the input image. The machine must guess the correct label of every image to consider it 100% successful. No machine guesses every label correctly.

Images can also be classified without the help of labels. This is called unsupervised learning, which follows a similar process from input to output, but instead of using labels to test success, it uses a statistical nearness model to determine if the classification, or clustering, of the images has a high likelihood of accuracy. Check out our recent blog on the differences between supervised and unsupervised learning.
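One widely used form of “statistical nearness” is k-means clustering, sketched below. It is offered as an example of the unsupervised idea, not as the specific method any particular product uses; the two-number feature vectors and the choice of starting centroids are assumptions.

```python
import math


def assign(points, centroids):
    """Return, for each point, the index of its nearest centroid."""
    return [
        min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
        for p in points
    ]


def update(points, labels, centroids):
    """Move each centroid to the mean of the points assigned to it."""
    moved = []
    for i, old in enumerate(centroids):
        members = [p for p, label in zip(points, labels) if label == i]
        if not members:
            moved.append(old)  # keep an empty cluster's centroid where it was
            continue
        moved.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
    return moved


def k_means(points, centroids, iterations=10):
    """Alternate between assigning points and moving centroids."""
    labels = assign(points, centroids)
    for _ in range(iterations):
        centroids = update(points, labels, centroids)
        labels = assign(points, centroids)
    return labels, centroids


# Hypothetical 2-number feature vectors for four images
points = [(0.9, 0.1), (0.8, 0.2), (0.1, 0.9), (0.2, 0.8)]
labels, centroids = k_means(points, centroids=[points[0], points[2]])
```

No labels are involved: the first two images end up in one cluster and the last two in another only because their vectors sit close together.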

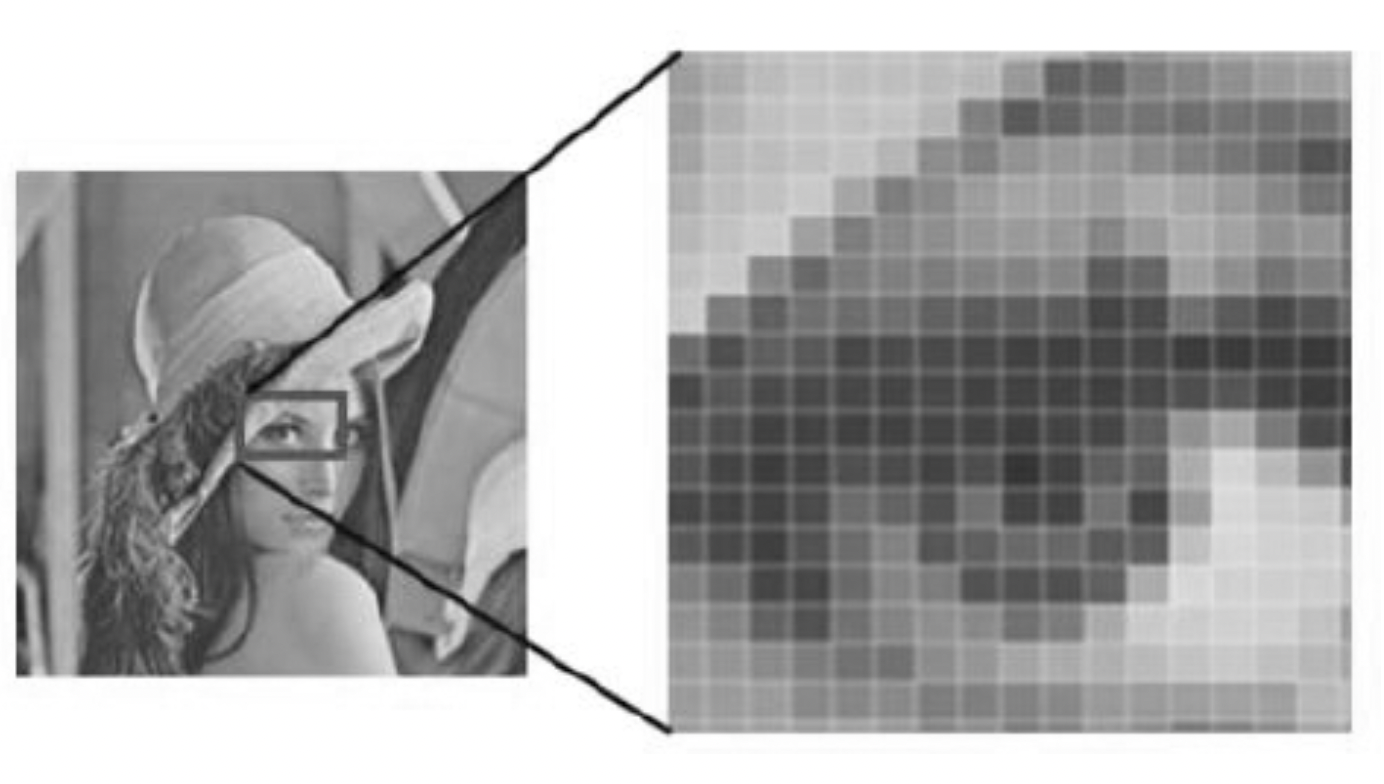

So here’s the process. The following photo, called Lena, is of Swedish model Lena Forsén. Lena has been used since 1973 as a standard test photo in the field of digital image processing.

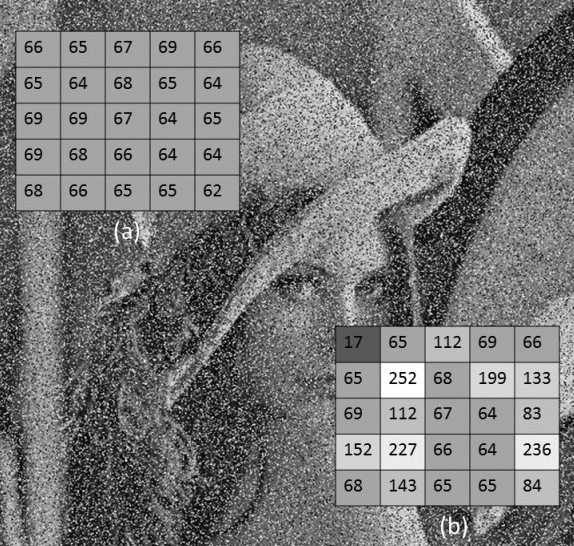

As already mentioned, the image is converted into a vector of pixels. However, the machine does not look at the whole picture. It starts small, splitting up the image into smaller vectors, for example, a 5×5 grid of mini-sections. A small image of 10×10 pixels, for instance, will be split into 25 smaller images of 2×2 pixels each.
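One way to arrive at 25 mini-sections from a 10×10 image is to cut it into non-overlapping 2×2 tiles, which form a 5×5 grid. The sketch below takes that reading as an assumption; real CNNs usually slide overlapping windows across the image instead.

```python
def split_into_tiles(image, tile_size):
    """Cut a 2D grid into non-overlapping square tiles, left to right, top to bottom."""
    height = len(image)
    width = len(image[0])
    tiles = []
    for top in range(0, height, tile_size):
        for left in range(0, width, tile_size):
            tile = [row[left:left + tile_size] for row in image[top:top + tile_size]]
            tiles.append(tile)
    return tiles


# A 10x10 "image" whose pixel values encode their own position (row * 10 + column)
image = [[row * 10 + col for col in range(10)] for row in range(10)]
tiles = split_into_tiles(image, tile_size=2)
```

Each tile is itself a tiny image that the network can examine on its own before combining what it found.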

Splitting the image into small images allows the network to examine smaller vectors of pixels

With that, the network continuously transforms the mini vectors into new vectors.

The numbers in the smaller vectors are transformed into new vectors. These transformations are calculated by statistical functions that multiply numeric weights, thus changing the input vector (a) to an output vector (b).
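The transformation from vector (a) to vector (b) can be sketched as a weighted sum per output number. The weights below are arbitrary, and the `relu` step, which zeroes out negative results, is a common addition in neural networks that is assumed here rather than taken from the description above.

```python
def transform(weights, vector):
    """Multiply each row of weights by the input vector and sum the products,
    producing one output number per row."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]


def relu(vector):
    """Replace negative values with 0 (a common activation, assumed here)."""
    return [max(0.0, v) for v in vector]


a = [1.0, 2.0, 3.0]                 # input vector
weights = [
    [0.5, 0.0, -1.0],               # arbitrary weights for output 1
    [1.0, 1.0, 1.0],                # arbitrary weights for output 2
]
b = relu(transform(weights, a))     # output vector
```

Training changes the numbers in `weights`; the arithmetic that turns (a) into (b) stays the same.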

The input photo, as it moves through the first layers, is transformed into showing only its edges:
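Edge detection of this kind can be sketched by sliding a small grid of weights, called a kernel, across the image. The sketch uses the well-known Sobel kernel for vertical edges as a stand-in; in a trained CNN the kernel values are learned rather than fixed, and the tiny test image is invented.

```python
def convolve(image, kernel):
    """Slide the kernel over the image (no padding) and return the grid of
    weighted sums. This is the sliding-window operation CNN layers apply."""
    k = len(kernel)
    out_height = len(image) - k + 1
    out_width = len(image[0]) - k + 1
    return [
        [
            sum(kernel[i][j] * image[y + i][x + j] for i in range(k) for j in range(k))
            for x in range(out_width)
        ]
        for y in range(out_height)
    ]


# Sobel kernel that responds to left-to-right changes in brightness
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# A 4x6 image that is dark on the left and bright on the right
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]
edges = convolve(image, SOBEL_X)
```

The output is zero wherever the brightness is uniform and non-zero only around the boundary between dark and bright, so the result keeps the edge and discards everything else.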

In the next layers, the network detects more features, like texture.

As the vectors pass through the network, they are given more features, until at the end of the process there is a single, final vector that represents the full dimensionality of the image. But we can’t show that because, in fact, the output of a neural network does not look like anything we’d understand. It’s worth keeping in mind that vectors are only a series of numbers, and because computers know only numbers, the resulting “image” looks nothing like what we’d see or expect.

So how does a machine know that it’s right? It compares its output to the expected output. In the labelled, supervised learning scenario, the network compares its output for the input image to a subset of images, all labelled, and if the network guesses the correct image with a high likelihood, then we consider that the machine has learned that image.

To see that, take a look at the following handwriting example. The handwritten digit 7 is fed into a network and compared to the (non-handwritten) labels at the end. If the model makes an accurate prediction, then you can say the machine has learned about that image. The machine needs to keep doing that for many images until it achieves a high level of success with nearly all new images.

This is an example of how a convolutional neural network (CNN) models handwriting. It is based on the MNIST dataset. As you can see, at the end, the output layer represents the image. This output vector is compared to the labels that the 7 should match. Here, since the element of the vector that corresponds to the label 7 has the highest value, the model has accurately predicted what the digit is.
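Reading a prediction off the output vector can be sketched as follows. It assumes ten output values, one per digit from 0 to 9, with positions numbered from 0; the scores are invented, and the softmax step that turns scores into probabilities is a standard choice assumed for this example.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the peak avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_digit(scores):
    """Return the position (0-9) of the highest probability."""
    probabilities = softmax(scores)
    return max(range(len(probabilities)), key=lambda i: probabilities[i])


# Hypothetical output-layer scores for one handwritten digit
scores = [0.1, 0.3, 0.2, 0.0, 0.1, 0.4, 0.2, 3.5, 0.6, 0.9]
digit = predict_digit(scores)
```

During training, the predicted digit is compared with the image’s label, and a mismatch is what drives the weights to change.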

There are many models already trained for image classification. Using a pre-trained model avoids reinventing the wheel. Using pre-trained models provides many benefits:

Companies can download pre-trained models, which come with an image dataset and all of its calculated weights. Companies can apply this data to build unique image search solutions. They can then train the model further using their own content. Further training adjusts and tailors the model’s weights to the company’s unique data and business use cases.

Visual shopping is a game changer that continues to advance thanks to the creativity and hard work of scientists, engineers, and retailers committed to pushing the boundaries of practical artificial intelligence.
