Add InstantSearch and Autocomplete to your search experience in just 5 minutes

A good starting point for building a comprehensive search experience is a straightforward app template. When crafting your application’s ...

Senior Product Manager

A good starting point for building a comprehensive search experience is a straightforward app template. When crafting your application’s ...

Senior Product Manager

The inviting ecommerce website template that balances bright colors with plenty of white space. The stylized fonts for the headers ...

Search and Discovery writer

Imagine an online shopping experience designed to reflect your unique consumer needs and preferences — a digital world shaped completely around ...

Senior Digital Marketing Manager, SEO

Winter is here for those in the northern hemisphere, with thoughts drifting toward cozy blankets and mulled wine. But before ...

Sr. Developer Relations Engineer

What if there were a way to persuade shoppers who find your ecommerce site, ultimately making it to a product ...

Senior Digital Marketing Manager, SEO

This year a bunch of our engineers from our Sydney office attended GopherCon AU at University of Technology, Sydney, in ...

David Howden &

James Kozianski

Second only to personalization, conversational commerce has been a hot topic of conversation (pun intended) amongst retailers for the better ...

Principal, Klein4Retail

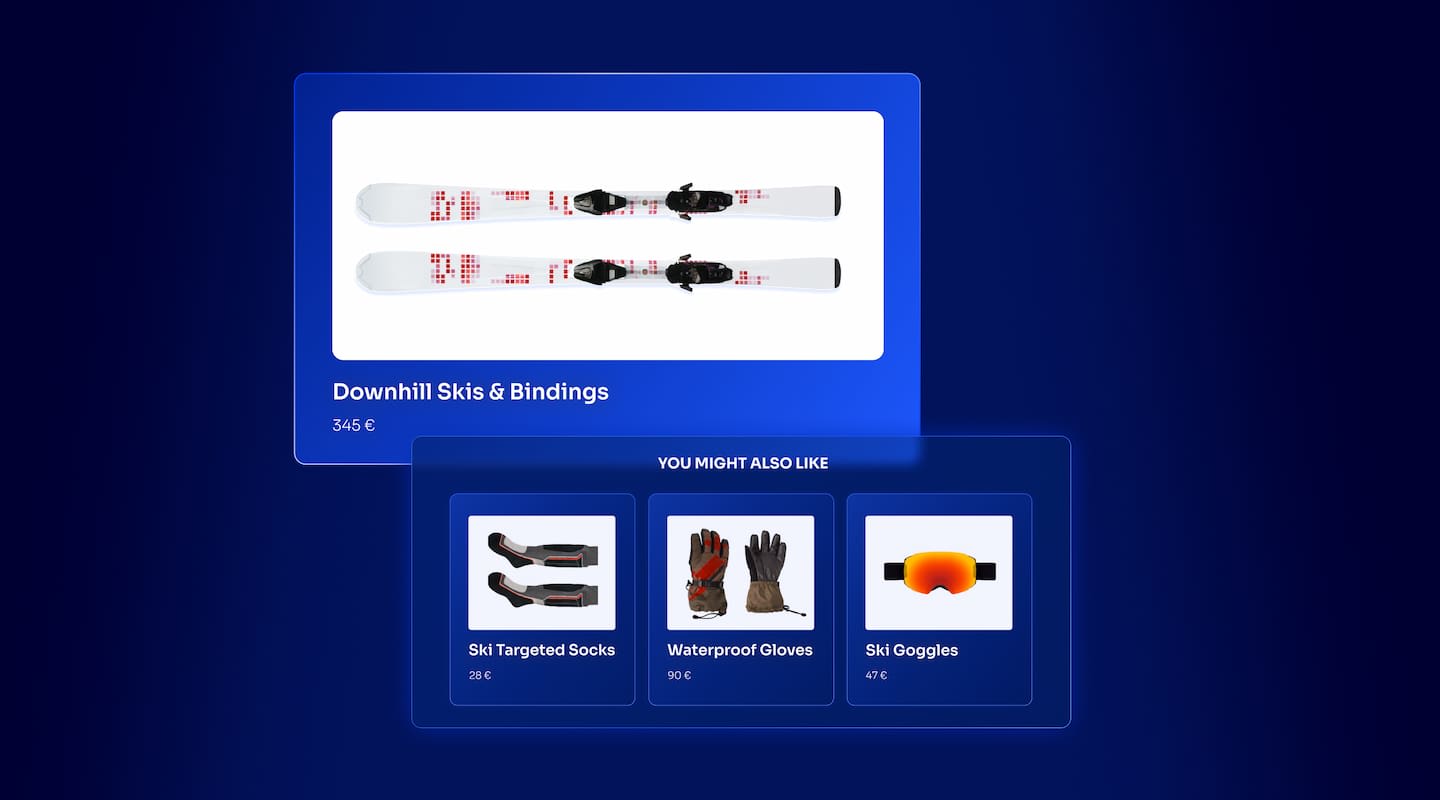

Algolia’s Recommend complements site search and discovery. As customers browse or search your site, dynamic recommendations encourage customers to ...

Frontend Engineer

Winter is coming, along with a bunch of houseguests. You want to replace your battered old sofa — after all, the ...

Search and Discovery writer

Search is a very complex problem Search is a complex problem that is hard to customize to a particular use ...

Co-founder & former CTO at Algolia

2%. That’s the average conversion rate for an online store. Unless you’re performing at Amazon’s promoted products ...

Senior Digital Marketing Manager, SEO

What’s a vector database? And how different is it than a regular-old traditional relational database? If you’re ...

Search and Discovery writer

How do you measure the success of a new feature? How do you test the impact? There are different ways ...

Senior Software Engineer

Algolia's advanced search capabilities pair seamlessly with iOS or Android Apps when using FlutterFlow. App development and search design ...

Sr. Developer Relations Engineer

In the midst of the Black Friday shopping frenzy, Algolia soared to new heights, setting new records and delivering an ...

Chief Executive Officer and Board Member at Algolia

When was your last online shopping trip, and how did it go? For consumers, it’s becoming arguably tougher to ...

Senior Digital Marketing Manager, SEO

Have you put your blood, sweat, and tears into perfecting your online store, only to see your conversion rates stuck ...

Senior Digital Marketing Manager, SEO

“Hello, how can I help you today?” This has to be the most tired, but nevertheless tried-and-true ...

Search and Discovery writer

Most of the world’s languages feature a wide range of unique quirks that make search engines stumble when processing queries. In this article, we’ll present the features we implemented to solve these issues, and go over their rationale and technical implementations.

Our engine is language agnostic, which means it’s designed to work with every written language. This is no easy feat, considering all the little and big differences between languages, from relying on different writing systems, as Japanese does, to using many variants of the same stem, as German does.

There is no silver bullet or magical way to handle each of these specificities. Some of the most common approaches rely on statistical models, which are by default close to impossible to debug and iterate upon. Our goal was to design a system that is not only simple to iterate upon, but also runs fast and works transparently.

We believe that we’ve found ways to achieve great relevance in many of the world’s languages. Today, we’re explaining our approach, and how to best use it to configure the relevance of your Algolia index for every language.

A well-known low-level step in NLP is normalization. The goal of this step is to depend more on the letter than on the way it was typed, to reduce friction between what the user wants and what has been indexed in the first place.

This step is actually composed of two steps: converting the text to lowercase and removing diacritics.

Those two steps are applied on the text while being indexed, and on the query, when a search is performed.

The normalization into lower case characters is easier to grasp: requiring an exact capitalization of the query is too strict to allow for a pleasant search experience.

Lately, however, we have been questioning our strategy of indiscriminately removing the diacritics (´, ¸, ¨, etc.).

The strategy was designed for the languages we started with, including French, and it brought a couple of benefits:

However, our bias led to some languages being harmed by this mandatory step of our search engine: mostly Nordic languages (Swedish, Norwegian, etc.) and Turkish. In French, e and é are considered by native speakers as more or less the same letter; for a Norwegian speaker, o and ø are not interchangeable at all.

Interchanging two such letters can create an issue by making the search unable to discern between two completely different words, like in this example in Turkish: cam → glass, çam → pine (pretty annoying if you are focused on selling furniture).
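To make the normalization step concrete, here is a minimal sketch using Python’s standard `unicodedata` module. It is only an illustration under assumptions: the function name `normalize` is hypothetical, and this is not the engine’s actual implementation.

```python
import unicodedata


def normalize(text: str) -> str:
    """Lowercase the text, then drop combining diacritical marks."""
    # NFD splits a letter such as "é" into "e" plus a combining accent.
    decomposed = unicodedata.normalize("NFD", text.lower())
    # Keep only the characters that are not combining marks.
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


print(normalize("Éléphant"))                  # elephant
print(normalize("cam") == normalize("çam"))   # True
```

The second line of output reproduces the Turkish collision: once diacritics are stripped, “cam” and “çam” can no longer be told apart. Note that letters such as ø have no canonical decomposition, so a decomposition-based approach like this one would leave them untouched; handling them would need an explicit mapping.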

As we already mentioned, this step is currently mandatory in Algolia’s search engine and can’t be disabled. We are thinking about improving this to allow a more granular handling of languages for which diacritics play an important role.

Typo tolerance is an important part of a search engine, and one of the earliest features Algolia brought to the table. Its role is really simple: be resilient to input mistakes, or plain misspelling.

The rules for typo tolerance are as follows:

By default, Algolia accepts 1 typo for words that are at least 4 letters long, and 2 typos for words that are at least 8 letters long. Those thresholds are configurable by the user.

On top of those rules, we added some subtleties, such as the fact that a typo on the first letter of a word is “penalized” double, because it is much less common to mistype the first letter of a word than any other letter.
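The default thresholds and the first-letter penalty can be sketched as follows. This is a simplified illustration, not the engine’s algorithm: it uses a plain Levenshtein distance, applies the length thresholds to the query word (an assumption), and approximates the double penalty by adding one extra typo whenever the first letters differ. All function names are hypothetical.

```python
def allowed_typos(word: str, min_one: int = 4, min_two: int = 8) -> int:
    """Number of typos tolerated for a word, based on its length."""
    if len(word) >= min_two:
        return 2
    if len(word) >= min_one:
        return 1
    return 0


def edit_distance(a: str, b: str) -> int:
    """Plain Levenshtein distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]


def typo_cost(query_word: str, indexed_word: str) -> int:
    """Edit distance, with one extra typo charged if the first letters differ."""
    cost = edit_distance(query_word, indexed_word)
    if cost and query_word[:1] != indexed_word[:1]:
        cost += 1  # assumption: a first-letter typo counts double
    return cost


def matches(query_word: str, indexed_word: str) -> bool:
    return typo_cost(query_word, indexed_word) <= allowed_typos(query_word)


print(matches("serch", "search"))   # True: one typo, five-letter query
print(matches("zearch", "search"))  # False: the first-letter typo costs two
```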

Those rules are easily applied to languages using Latin alphabets: while some languages tend to have shorter or longer words on average (which could be translated to increasing or decreasing the minimum number of letters before accepting typos), they are all similar in the way typo tolerance can be configured.

Languages using logograms, such as Chinese or Japanese, are a whole different story. Because of the way those languages are input, typos as we know them in Latin-alphabet languages don’t really occur.

However, those languages are subject to the presence of alternative logograms for the same word. Those alternatives can exist for two reasons:

In the last couple of decades, while browsing the internet, most of us adopted the habit of transforming natural language questions such as: “What is the best search engine?” to full-text queries we knew would be better interpreted by search engines, such as: “best search engine”. While those requests used to yield better results thanks to the decrease of noise in the query, this is less and less true because of two major factors:

By default, Algolia requires all the words in the query to be present in a record for it to be considered a match. While it is possible to remove words when no results are found, all the words will still be considered equal, and “the” won’t be considered as less important than “search”.

To handle this case, we introduced early on a removeStopWords setting that removes all the “unnecessary” words from the query string, ultimately keeping only “best search engine”.

To accomplish this, we are using a really simple dictionary-based approach. We parsed several sources (Wiktionary and ranks.nl) in order to build a list of words commonly used as stop words, not only in English, but in about 50 languages.

The first implementation of the setting suffered from a major flaw: it was a single boolean value, and one could only activate this feature for all languages simultaneously or not at all. A lot of corner cases were observed, such as “thé” (the French word for “tea”) being removed because “the” is an English stop word, thus transforming a really specialised “magasin de thé” (tea shop) into a regular “magasin” (shop).

Since then, we changed the setting; the feature can now be set to a list of languages, allowing one to remove stop words from one language at a time.
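The difference between the boolean behavior and the per-language list can be illustrated with a small sketch. The stop-word lists below are tiny, hypothetical stand-ins for the real dictionaries, and `remove_stop_words` is an illustrative helper, not the engine’s API. The French query is written as “magasin de the”, which is what “magasin de thé” becomes once diacritics are removed.

```python
# Toy stop-word lists (hypothetical data); real dictionaries are much larger.
STOP_WORDS = {
    "en": {"what", "is", "the"},
    "fr": {"de", "le", "la"},
}


def remove_stop_words(query: str, languages: list[str]) -> list[str]:
    """Drop the stop words of the selected languages from the query."""
    stop = set().union(*(STOP_WORDS.get(lang, set()) for lang in languages))
    return [word for word in query.lower().split() if word not in stop]


print(remove_stop_words("What is the best search engine", ["en"]))
# ['best', 'search', 'engine']
print(remove_stop_words("magasin de the", ["en", "fr"]))  # all languages at once
# ['magasin']
print(remove_stop_words("magasin de the", ["fr"]))        # French only
# ['magasin', 'the']
```

Restricting the list to French keeps the word for “tea” in the query, which is exactly the corner case the per-language setting was meant to fix.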

While this feature doesn’t work well in itself with languages not using spaces (such as Japanese), once coupled with other pre-processing steps (such as word segmentation) it behaves just the way you would expect it to.

While the list of stop words for a given language is not likely to change, we still have a few ideas in mind in order to improve this feature:

In English, words have a rather limited number of alternative forms:

However, even if “car” and “cars” are not so different from each other, there are use cases where you still want to consider them as one and the same (“feet massage” and “foot massage”).

To tackle this issue we created a parameter called ignorePlurals which has over the years undergone several transformations.

The problem can be solved in two different fashions:

1. When indexing words, strip them to their singular form: “feet” → “foot”, “massages” → “massage”. Whenever a query is sent, do the same to each word of the query: “feet massages” → “foot massage”, then search for those root forms.

2. Index the words the way they are given (“feet” → “feet”), but generate all possible alternatives for all the words in the query: “feet massages” → “(foot|feet) (massage|massages)“ and search for any of these forms.

At Algolia we chose to go for the second method. The reason is simple: typo tolerance. If the word is not indexed exactly as it was given, then you can’t accurately compute the number of typos afterwards: that’s why it is important for us to not alter the words that are indexed.
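The second method can be sketched as a query-time expansion. The groups of alternative forms below are hypothetical sample data, and `expand_query` is an illustrative name; the point is only that indexed words stay untouched while each query word is expanded into its known alternatives.

```python
# Hypothetical sample of alternative forms; a real dictionary is far larger.
ALTERNATIVES = [
    {"foot", "feet"},
    {"massage", "massages"},
    {"car", "cars"},
]

# Map every known form to the full group it belongs to.
FORMS = {form: group for group in ALTERNATIVES for form in group}


def expand_query(query: str) -> list[list[str]]:
    """Return, for each query word, the sorted list of forms to search for."""
    return [sorted(FORMS.get(word, {word})) for word in query.lower().split()]


print(expand_query("feet massages"))
# [['feet', 'foot'], ['massage', 'massages']]
```

Each inner list is an OR between forms, and the outer list is the usual AND between query words, which matches the “(foot|feet) (massage|massages)” notation used above.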

There are two Natural Language Processing (NLP) techniques to reduce a word to its singular form or find its alternative forms: Stemming and Lemmatization.

We have mixed feelings towards stemming, as a single rule could cause a lot of harm if you consider the following points:

We make heavy use of lemmatization. The only thing that lemmatization efficiency depends on is the accuracy and completeness of the dictionary used. We are continually working on improving our dictionary, primarily based on Wiktionary.

Wiktionary relies heavily on templates such as {{en-noun|s}}, which allow Wiktionary contributors to declare alternative forms when writing a page on a word. For example, {{en-noun|s}} would show up like this on the “car” page of Wiktionary:

Noun[edit]

car (plural cars)

By using those templates found inside the Wiktionary data, we are able to build our dictionary of alternative forms. Almost every language has its own template syntax, and many languages have multiple templates to do so. Because implementing everything at once is extremely tedious, we chose to improve our dictionary gradually, mostly upon user feedback about missing words/languages.

We spent a fair amount of time on this, and improved a lot of the features over time:

ignorePlurals evolves a lot: words are constantly added, new types of alternatives are supported (e.g., most recently, Dutch diminutives). The hardest part is actually not adding words, but finding the right balance between improving relevancy and harming it with noise.

CJK stands for Chinese, Japanese, Korean. The acronym is pretty popular and we use it a tad inaccurately to refer to any language that doesn’t use spaces to separate its words (Thai and Lao, for example).

Finding the words in a query is a crucial step for a search engine: when you are searching for something, you want documents containing the words of your query, but not necessarily in the same order. You want the engine to be less strict about whether words are consecutive. For example, if you are searching for “chocolate cookies”, you don’t want only results with “chocolate” and “cookies” back to back; you also want “chocolate chip cookies” and “cookies with chocolate chips” to show up in results.

When a language delimits its words with spaces, things are relatively simple; when it doesn’t, you roughly have 3 choices:

1. Take each character separately. At first, from the point of view of someone not familiar with CJK languages, this makes sense, especially with Chinese or Japanese. Those languages heavily use logograms, and we have the false impression that 1 character = 1 word. This, however, is not the case, as many notions are expressed as logogram compounds: a concatenation of several logograms that make up a new word. Besides, as mentioned, Chinese and Japanese are not the only languages that don’t use spaces. That leaves this approach out of the question.

2. Require the exact query to be inside the matches, verbatim. This greatly constrains the search, but it at least keeps the meaning of the query. While far from being perfect, it’s an acceptable fallback if the method I will introduce next fails. Example: 寿司とラーメン (sushi and ramen). While you would want to be able to also find ラーメンと寿司 (ramen and sushi), with this method we would constrain the results to documents containing only 寿司とラーメン (sushi and ramen).

3. Find the words inside the query yourself. The segmentation of a sentence into words is a problem CJK experts have always faced, and several satisfactory techniques have been developed to solve it. None of them guarantees 100% accuracy, and like everything else in engineering, it’s a matter of compromise between speed/cost and accuracy/quality. This is the behavior we implemented inside the engine.

There are many ways to implement the segmentation, ranging from statistical to dictionary-based methods.

We strive for an as-you-type experience, which gives us roughly 100ms (the threshold below which our brain doesn’t really register a difference in response speed) to answer a query, network latency included. Because of this requirement, we settled on the fastest method to find words inside a query: a dictionary-based approach, as implemented in the ICU library, using the MeCab dictionary enriched with data from Wiktionary.

The idea is simple: starting from the left of the query, we will try to decompose the query with words that are present in our dictionary. In case of ambiguity we are prioritizing the solution that maximizes the length of the words and minimizes the number of characters not belonging to a known word.
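A dictionary-based segmentation following this idea can be sketched with a small dynamic program. This is an illustration only, not the ICU implementation: the dictionary is a toy example, `segment` is a hypothetical name, and ambiguity is resolved by first minimizing the number of characters outside any known word, then minimizing the number of tokens (which favors longer words).

```python
def segment(text: str, dictionary: set[str]) -> list[str]:
    """Split text into dictionary words, scanning prefixes from the left."""
    max_len = max(map(len, dictionary), default=1)
    # best[i] = (unknown characters, token count, tokens) for text[:i]
    best = [(0, 0, [])]
    for end in range(1, len(text) + 1):
        # Fallback: treat the last character as an unknown one-character token.
        unknown, count, tokens = best[end - 1]
        candidate = (unknown + 1, count + 1, tokens + [text[end - 1]])
        for start in range(max(0, end - max_len), end):
            word = text[start:end]
            if word in dictionary:
                unknown, count, tokens = best[start]
                option = (unknown, count + 1, tokens + [word])
                # Prefer fewer unknown characters, then fewer (longer) words.
                if option[:2] < candidate[:2]:
                    candidate = option
        best.append(candidate)
    return best[-1][2]


toy_dictionary = {"寿司", "と", "ラーメン", "ラー"}
print(segment("寿司とラーメン", toy_dictionary))
# ['寿司', 'と', 'ラーメン']
```

Even though “ラー” is in the toy dictionary, the longer “ラーメン” wins because it leaves no character unaccounted for.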

As of today, we already have a couple of ideas about what we could do to improve the segmentation of CJK languages. First and foremost, the segmentation is based on a dictionary, which means that any improvement made to the dictionary is sure to improve the overall segmentation:

There is another issue that is in fact really close to the segmentation of CJK characters. In some languages (German, Dutch, Finnish, etc.) several nouns can be concatenated without space to form new words. For example, Baumhaus is a German word composed of Baum (tree), and Haus (house) which designates a “tree house”.

A person searching for “Baumhaus” (tree house) may very well be interested in results containing something like “Haus in einem Baum” (house in a tree), but the default Algolia behavior is to only search for Baumhaus. This issue is what prompted us to spend some time working on handling agglutinated words, or compounds, better.

The very first step that we implemented was a naive splitting of the words in the query. The idea was simple: in the case of “Baumhaus”, if the index were to contain “Baum Haus” consecutively, a match was possible. This feature was more akin to a typo tolerance on spaces than a real split of compound words, as the words still had to be adjacent to one another, which is a limitation we got rid of in the new version.

Since then, we settled on full-fledged processing steps that look at each word individually. If the word happens to be a compound word (here again, we rely on static dictionaries to avoid false positives), its various parts are indexed separately.

As of today, this feature can be activated using the decompoundedAttributes setting. The languages for which decompounding should be attempted must be specified; three languages are implemented: Finnish, German and Dutch.

We designed this feature to interact with all the other goodies coming with Algolia: synonyms, ignorePlurals, etc.

This setting is still rather new, and we expect to allocate time improving the dictionaries used by the decomposition process in order to increase the accuracy of the feature. While today we don’t have a strong demand for languages other than those implemented, additional languages may show up in the future.

For languages which use spaces to delimit words, there are still subtleties around separating words, for example with acronyms or words separated by hyphens or single quotes. We will review two cases to better illustrate how Algolia handles this.

Acronyms: D.N.A. There are two ways of approaching this:

1. considering that we have three words: D and N and A

2. considering that we have a single word: DNA

In this case, the second solution makes more sense:

In this case, we consider D.N.A. the same as DNA.

Compounds: off-campus or a.to_json. If we were to apply the rules previously established for acronyms, we would index and search for offcampus and ato_json. In those cases, this is not the ideal behaviour. In the case of compounds, words can be searched individually, or even written without the punctuation in between: both “off campus” and “off-campus” are valid spellings. Transforming “off-campus” into “offcampus” would prevent a search for “off campus” from retrieving results containing “off-campus”.

In that case, “off-campus” will be considered as “off” “campus” and “offcampus”.

In the case of “a.to_json”, we will consider “ato_json” and “to_json”.

This behavior follows two simple rules:

Those rules allow for enough flexibility on the presence or absence of punctuation, and avoid unnecessary small words like “a” for “a.to_json” that could hurt any prefix search.
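The three examples above (D.N.A., off-campus, a.to_json) can be reproduced with a short sketch. The rules encoded here are inferred from those examples only and are assumptions: the separators are limited to periods, hyphens and single quotes, the concatenation of all parts is always kept, and a part is kept on its own only if it has at least two characters. The name `punctuation_variants` is hypothetical.

```python
import re

# Assumed separator set: period, hyphen, single quote.
SEPARATORS = re.compile(r"[.\-']")


def punctuation_variants(token: str, min_part_len: int = 2) -> set[str]:
    """Return the forms considered for a token containing separators."""
    parts = [part for part in SEPARATORS.split(token) if part]
    # Keep parts that are long enough to be meaningful on their own.
    variants = {part for part in parts if len(part) >= min_part_len}
    # Always keep the token with its separators removed.
    variants.add("".join(parts))
    return variants


print(punctuation_variants("D.N.A."))                # {'DNA'}
print(sorted(punctuation_variants("off-campus")))    # ['campus', 'off', 'offcampus']
print(sorted(punctuation_variants("a.to_json")))     # ['ato_json', 'to_json']
```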

Below is a table roughly summarizing the different pre-processing steps we are applying on some of the most popular languages found across the Internet:

While we are always striving to address language specificities, we know that we are only at the very beginning of the journey, and we already have a long list of language-related issues we want to address further.

Those issues relate to various parts of the processing of a query:

If you have ideas or feedback, we’d love to hear it: comment below or tweet to us @algolia.

Software Engineer
