Algolia Search has always been exceptionally fast. Very few APIs let you call them many times per second and respond faster than your eye can notice, almost as if you weren’t querying a remote service in a data center hundreds or thousands of miles away. When you work on the front end, this is quite freeing. No need to debounce requests or care about loading states; you can rely on the service and focus on crafting experiences that feel instant.

With the introduction of NeuralSearch, we Algolia engineers have been presented with a new set of challenges to maintain unparalleled performance while adding one of the most ambitious features we’ve designed since the first release of Algolia Search.

And this isn’t just about optimizing back-end services and cloud infrastructures. When it comes to speed, every link in the chain counts.

No matter how much you optimize, adding to an existing system is never free. Some changes are subtle or cheap enough to be imperceptible to the end user. And then, there are significant changes that challenge existing designs. These are usually the most interesting, exciting, and innovative features, but they’re also the ones that stack up and compel you to reconsider what you always took for granted.

When talking about NeuralSearch, it’s important to mention that we are adding a completely new engine, based on neural hashes, to the existing keyword-based Algolia search engine for more relevant, semantic results.

The introduction of NeuralSearch is challenging because it brings a complex LLM onto the critical path of the search. Thanks to our neural hashes, the performance impact is contained compared to classical vector-based solutions, but it still invites us to reconsider early choices, optimize differently, and progressively adopt new designs to solve new problems.

Algolia provides InstantSearch, a family of UI libraries that fully integrate with the Algolia APIs to help customers quickly build their search & discovery interfaces without handling the unappealing work they’re not experts in. These libraries are designed to receive new content from Algolia whenever the end user interacts with the UI, so naturally, they’re sensitive to the performance of the underlying API. If it takes longer than usual to get search results, no amount of front-end optimizations will make them come faster.

Still, we believe that speed is an end-to-end concern. Building a platform of independent yet composable services means every component in the system requires careful consideration of its individual performance while staying mindful of how it eventually connects to other components. Although the front-end libraries can’t make the Algolia API respond faster, they can account for new constraints and seek to balance them out.

With this in mind, we started looking into ways to compensate when Algolia takes longer to respond. How does this affect the UI? Sure, we can’t force search results to come faster, but is that the whole story?

Interactivity is a crucial component of how you design a search UI library. Unlike a blog or a marketing site, a search UI involves a strong, two-way relationship with the user: they type, click, tap, reset, refine, and expect the interface to reflect those changes.

Eventually, we realized that slower network responses didn’t only affect how fast new search results showed up but also how fast user-initiated actions were reflected on screen. For example, say you have a search experience for electronics with a filtering UI component that lets you select the specific categories you wish to see. When the end user clicked on a category refinement and Algolia was slower than usual, it took a while for the refinement to appear selected.

When clicking a category refinement on a throttled network (Good 2G), the UI remains idle, unresponsive to the user’s interaction until the network request finally settles.

From a user experience perspective, this is confusing. The UI feels stuck: you’re not sure whether your click registered, so you might click again, which can cancel out your initial action. All of this creates friction and erodes user trust. It doesn’t matter that the state is “technically consistent.” What feels natural is to see immediate, incremental updates of parts of the UI based on interactions and eventually reach a consistent state once the updated search results arrive.

This problem surfaced a design limitation: InstantSearch derives and updates its entire state from API responses. In most cases, this works fine because Algolia Search is optimized end-to-end to respond faster than your eye can notice. But when Algolia takes a bit longer to respond because of network latency or internal slowdowns, the clicked refinement doesn’t show up as selected until there’s a response.

With these new constraints in mind, we decided to challenge our initial choices and introduce optimistic UI to the design of InstantSearch.

Optimistic UI is a pattern that increases the speed perception of a user interface. Humans are exceptionally good at noticing delays, especially following an interaction. Research shows that 100ms is the limit for users to feel that a system is reacting instantaneously—any longer, and it breaks the connection between action and reaction.

When browsing Instagram and hitting the “Like” button on a post, you expect instant reactivity. The interface should give you immediate feedback and confirm with a visual or haptic response.

When hitting the “Like” button on Instagram, you get an immediate visual response even though the like action may not have been fully dispatched or processed on the back end.

Technically, the whole thing may take time: the network request needs to go through, the back end needs to process the operation (or even queue it if the service is busy), and, granted that everything goes well, respond to the front end. But if the front end waits for this response to confirm the interaction visually, the user may sense a delay.

Such a situation is where optimistic UI chimes in. Instead of cautiously making the user wait in the rare event that the operation may fail, you adopt a confident attitude that it will eventually succeed and immediately reflect it on the UI. If something goes wrong, you can always revert that state and let the user know.

When doing so, you refocus the experience around the user and decide to trust your system: if you’re building a performant, scalable, resilient service and your logs confirm it, it makes sense to design around positive outcomes.
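As a rough illustration of the pattern, here is a minimal sketch of an optimistic "Like" toggle. Every name in it (`LikeState`, `toggleLike`, `sendLike`, `render`) is hypothetical and stands in for whatever state container, network call, and rendering function an app actually uses.

```typescript
// Hypothetical names: LikeState, toggleLike, sendLike, and render are illustrative only.
interface LikeState {
  liked: boolean;
  error: string | null;
}

async function toggleLike(
  state: LikeState,
  sendLike: (liked: boolean) => Promise<void>,
  render: (state: LikeState) => void
): Promise<void> {
  const previous = state.liked;

  // Optimistic step: flip the flag and render before the request settles.
  state.liked = !previous;
  state.error = null;
  render(state);

  try {
    await sendLike(state.liked);
  } catch {
    // The request failed: revert to the previous value and tell the user.
    state.liked = previous;
    state.error = "Couldn't save your like. Try again.";
    render(state);
  }
}
```

The first `render` call happens synchronously, so the user sees the change no matter how long `sendLike` takes. The second one only runs on the rare failure path.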

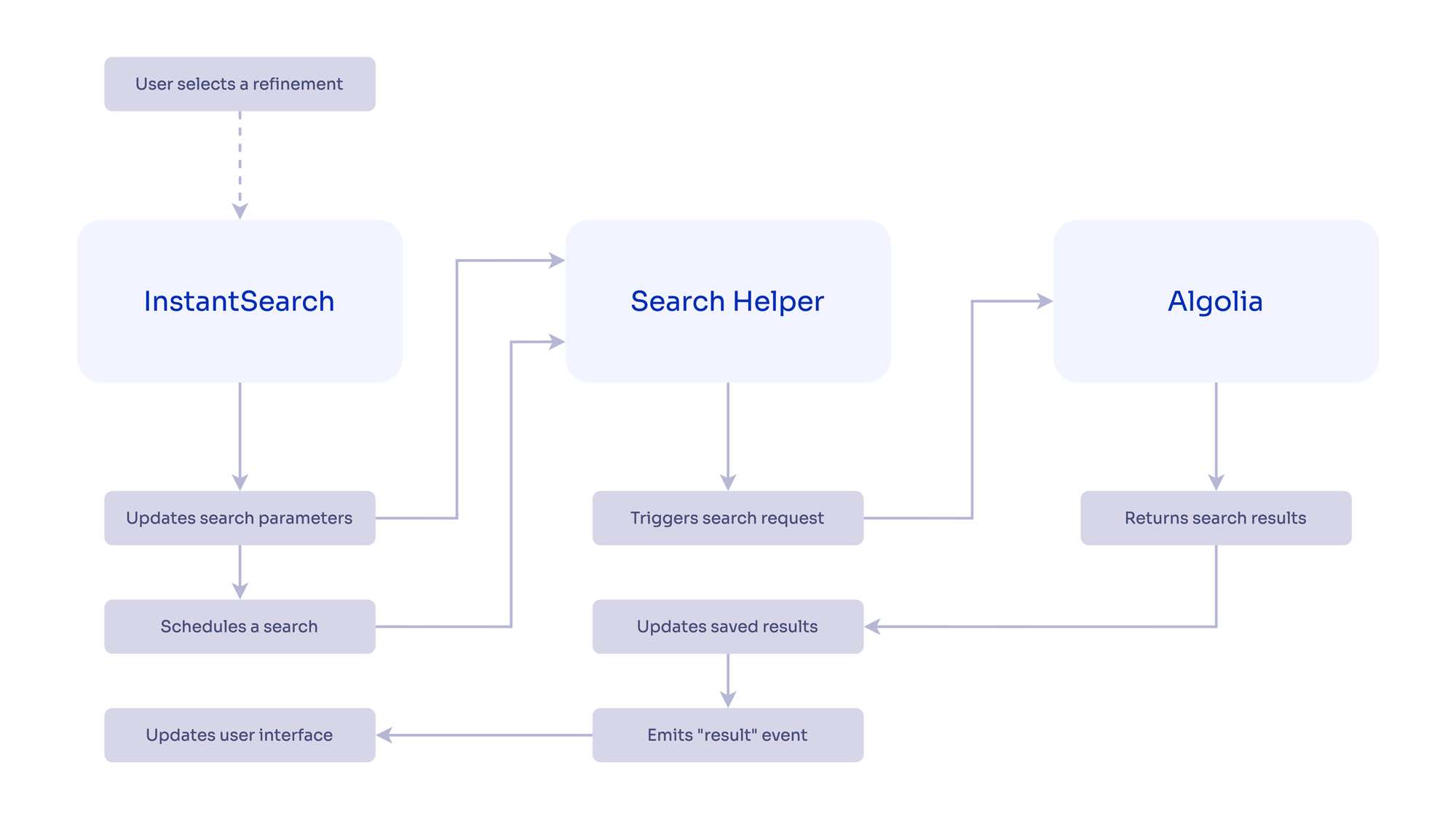

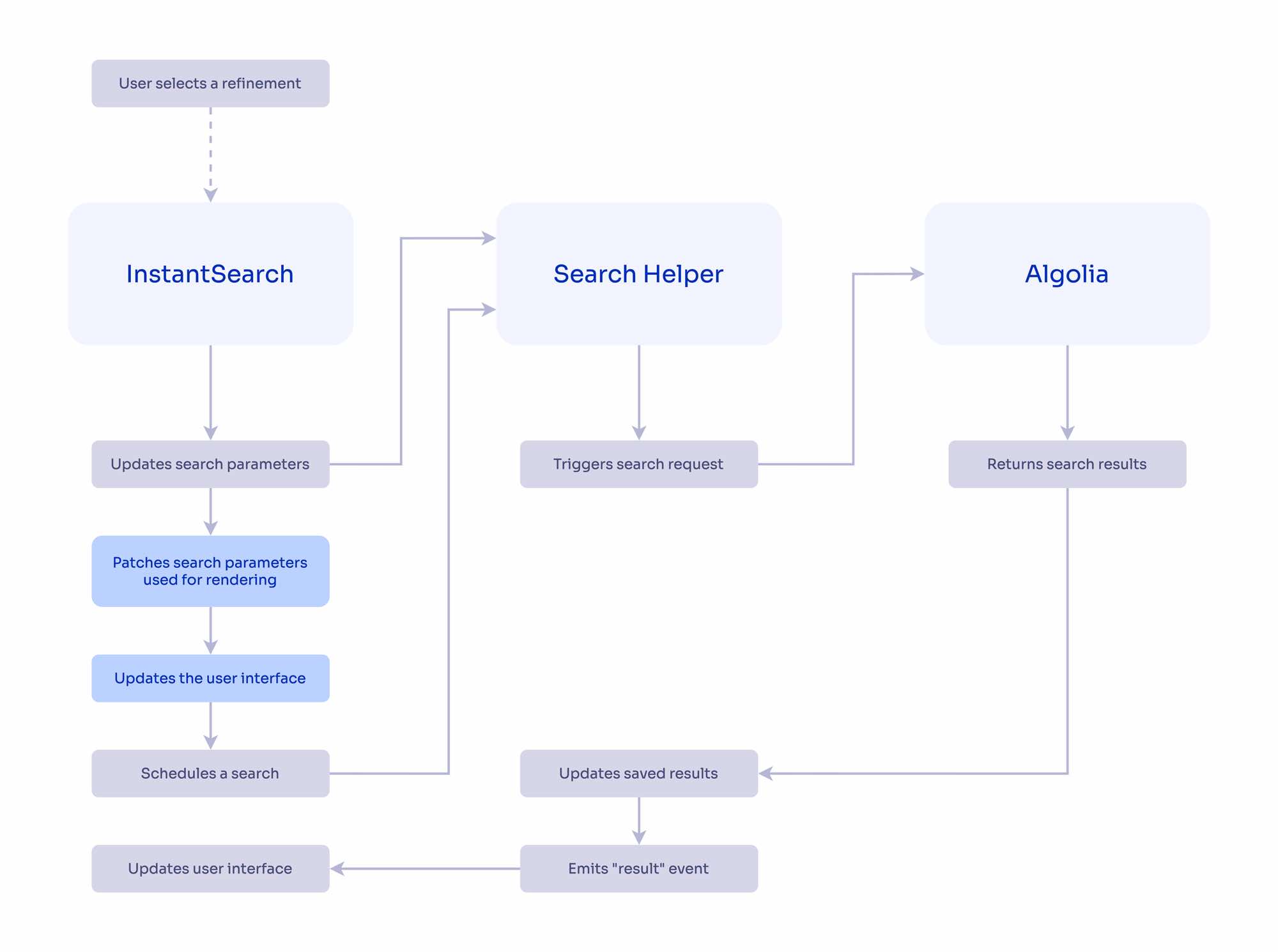

InstantSearch uses a unidirectional data flow: it performs requests to the Algolia Search API, then derives its UI state from the response. The piece in charge of this state logic is the search helper, an internal dependency that performs search requests and keeps track of the state.

When the end user selects a refinement, InstantSearch updates the search parameters with the new facet refinement and schedules a search. When the helper receives a response from Algolia, it emits an event to which InstantSearch reacts by rendering with the helper’s new state—this is when the UI finally updates.

This model conflicts with optimistic UI. It “plays safe” by using API responses as the single source of truth, but it does so at the expense of the user and of how they perceive interaction flows.
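To make the delay concrete, the sketch below models a response-driven flow in a few lines. It is a simplified, hypothetical model: `ResponseDrivenHelper`, `refine`, `receiveResponse`, and `selectedRefinements` are illustrative names that don't mirror the actual search helper API, and the network is simulated by calling `receiveResponse` by hand.

```typescript
// Hypothetical model of a response-driven search state; not the real helper API.
interface SearchParameters {
  query: string;
  facetRefinements: string[];
}

interface SearchResults {
  parameters: SearchParameters; // the parameters that produced these results
  hits: string[];
}

class ResponseDrivenHelper {
  // Parameters for the next search to perform.
  nextParameters: SearchParameters = { query: "", facetRefinements: [] };
  // Latest response; the UI derives everything from it.
  lastResults: SearchResults | null = null;

  // User interaction: update the parameters and (conceptually) schedule a search.
  refine(facetValue: string): void {
    this.nextParameters = {
      ...this.nextParameters,
      facetRefinements: [...this.nextParameters.facetRefinements, facetValue],
    };
  }

  // Stand-in for the event fired when the API responds.
  receiveResponse(hits: string[]): void {
    this.lastResults = { parameters: this.nextParameters, hits };
  }

  // What the UI shows as selected: only what the last response confirms.
  selectedRefinements(): string[] {
    return this.lastResults ? this.lastResults.parameters.facetRefinements : [];
  }
}

const helper = new ResponseDrivenHelper();
helper.refine("Laptops");
const beforeResponse = helper.selectedRefinements(); // empty: no response yet
helper.receiveResponse(["Laptop B"]);
const afterResponse = helper.selectedRefinements(); // contains "Laptops"
```

Between `refine` and `receiveResponse`, nothing the user did is visible. On a fast network that window is negligible; on a slow one it is the idle UI described earlier.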

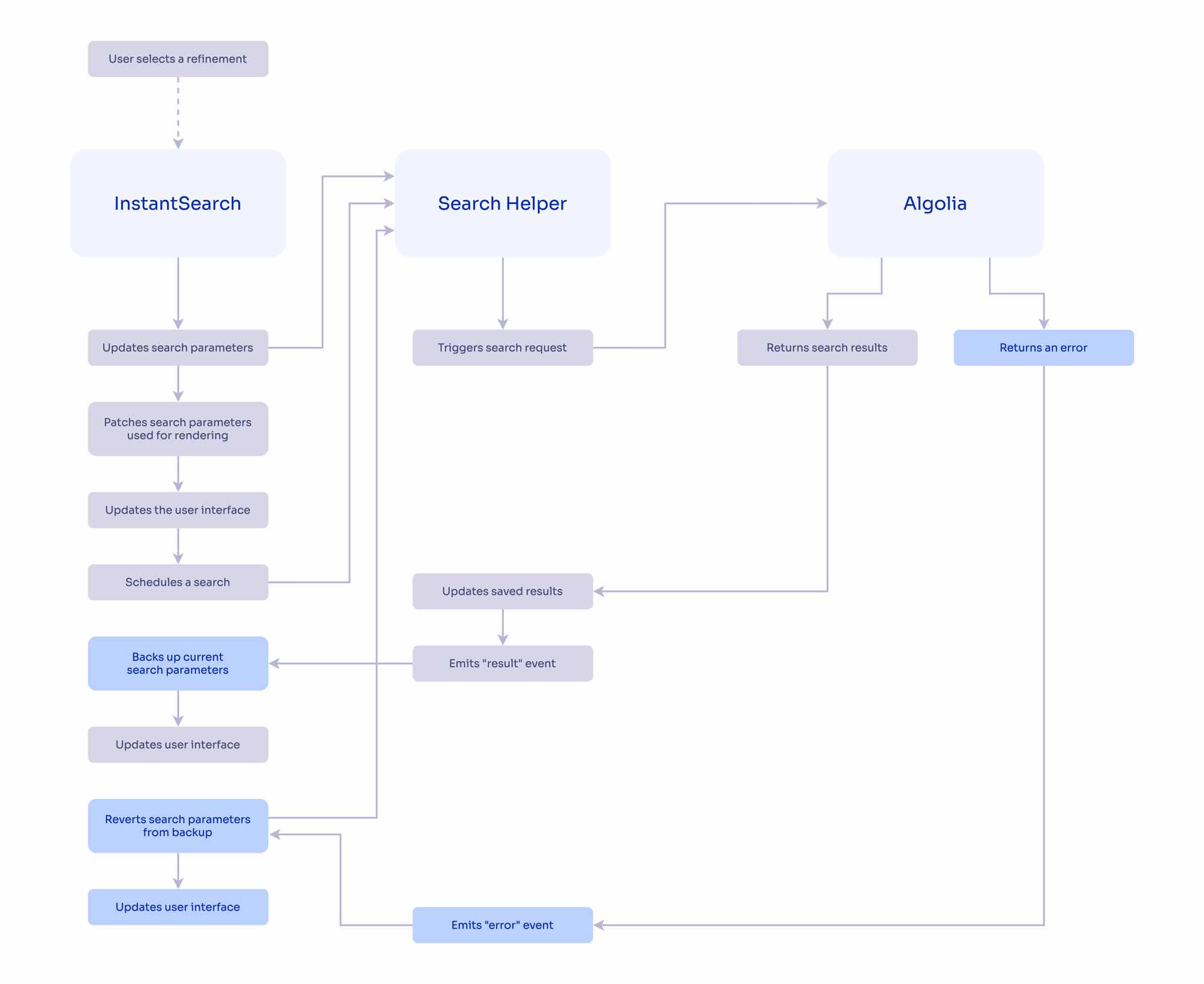

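To make InstantSearch optimistic, we needed to perform two changes: reflect user interactions in the UI immediately instead of waiting for the API response, and revert to the last known good state when a search fails.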

The search helper keeps track of not only one but two pieces of state: the current search parameters and the latest received search results, which carry the search parameters that produced them.

The current search parameters are often “ahead” of the ones in the latest received search results because they represent the next search to perform. Using only the search parameters from the latest search results provides us with the safety of a single source of truth, but induces the network-dependent delay we wanted to eliminate. Although the mismatch eventually resolves itself, it relies on constant new searches and immediate responses that we can’t guarantee.

We decided to keep relying on our single state, but patch its search parameters with the current ones as soon as we schedule a new search from a user interaction. This creates a temporary state where the search parameters and the search results aren’t strictly coherent but better reflect the end user’s mental model.
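A minimal sketch of this patching step could look like the following. It is an assumption-laden model, not the InstantSearch implementation: `OptimisticHelper`, `renderState`, `refine`, and `receiveResponse` are hypothetical names, and the sketch assumes a single search in flight at a time.

```typescript
// Hypothetical model of the optimistic patch; names don't mirror the real helper API.
interface SearchParameters {
  facetRefinements: string[];
}

interface RenderState {
  parameters: SearchParameters; // drives which refinements look selected
  hits: string[]; // hits from the latest response
}

class OptimisticHelper {
  // Parameters of the next search to perform.
  currentParameters: SearchParameters = { facetRefinements: [] };
  // The single state the UI renders from.
  renderState: RenderState = { parameters: { facetRefinements: [] }, hits: [] };

  refine(facetValue: string): void {
    this.currentParameters = {
      facetRefinements: [...this.currentParameters.facetRefinements, facetValue],
    };
    // Optimistic patch: swap in the current parameters right away, keep the old hits.
    this.renderState = { ...this.renderState, parameters: this.currentParameters };
  }

  // Stand-in for the API response (assumes a single search in flight).
  receiveResponse(hits: string[]): void {
    this.renderState = { parameters: this.currentParameters, hits };
  }
}

const optimistic = new OptimisticHelper();
optimistic.receiveResponse(["Phone A", "Laptop B"]);
optimistic.refine("Laptops");
// No new response yet: "Laptops" is already selected, hits are still the old ones.
const selectedNow = optimistic.renderState.parameters.facetRefinements;
const hitsNow = optimistic.renderState.hits;
```

The render state is briefly incoherent here (new parameters, old hits), which is the temporary mismatch the text accepts in exchange for immediate feedback.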

When clicking a category refinement on a throttled network (Good 2G), the UI immediately reflects it. When Algolia responds, the search results and subcategories update as well.

Although optimistic UI relies on an ideal near future, we must account for when it doesn’t work out. It can happen when the Internet connection fails or in the rare event that the Algolia API is unreachable.

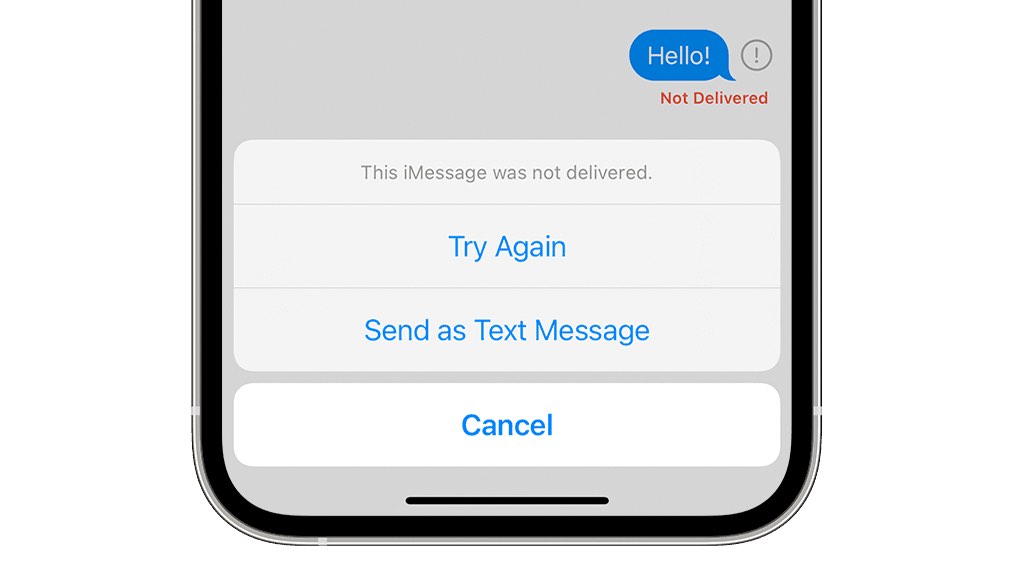

The canonical way to handle errors in an optimistic UI is to revert to the original state and possibly let the user know something went wrong. A typical example is the iOS Messages app: when you send a text, the app optimistically adds it to the conversation flow (and shows a progress bar to reflect the send status). When the message doesn’t go through, it recovers gracefully by displaying a warning icon next to it that you can tap to try again.

In the case of InstantSearch, when the end user selects a refinement that we immediately reflect, but the associated search results never come, we just want to unselect the refinement to reflect the actual state.

To do so, we decided to save the latest “correct” search parameters received from a successful API response as a backup for the next ones. Whenever an error occurs, we can change the helper state back to align with our results.
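The backup-and-revert idea can be sketched as follows. Again, this is a hypothetical model rather than the library's code: `RevertingHelper`, `lastConfirmedParameters`, `receiveResponse`, and `receiveError` are illustrative names, and parameter objects are replaced rather than mutated so that the backup stays intact.

```typescript
// Hypothetical model of the error rollback; names don't mirror the real helper API.
interface SearchParameters {
  facetRefinements: string[];
}

class RevertingHelper {
  // Optimistic parameters: what the UI shows as selected.
  currentParameters: SearchParameters = { facetRefinements: [] };
  // Backup: parameters confirmed by the latest successful response.
  lastConfirmedParameters: SearchParameters = { facetRefinements: [] };
  errorMessage: string | null = null;

  refine(facetValue: string): void {
    // A new object is created each time, so the backup is never mutated.
    this.currentParameters = {
      facetRefinements: [...this.currentParameters.facetRefinements, facetValue],
    };
  }

  // Successful response: the current parameters become the new backup.
  receiveResponse(): void {
    this.lastConfirmedParameters = this.currentParameters;
    this.errorMessage = null;
  }

  // Failed request: fall back to the parameters that match the results on screen.
  receiveError(message: string): void {
    this.currentParameters = this.lastConfirmedParameters;
    this.errorMessage = message;
  }
}

const reverting = new RevertingHelper();
reverting.refine("Laptops");
reverting.receiveResponse(); // "Laptops" is now confirmed
reverting.refine("Phones"); // shown as selected right away
reverting.receiveError("Network unreachable");
const selectedAfterError = reverting.currentParameters.facetRefinements; // only "Laptops"
```

Keeping `errorMessage` alongside the rollback is one way to let the UI explain why a refinement was deselected; whether to surface it is a product decision.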

When considering performance within platforms like Algolia, the big picture is what matters. Speed is an end-to-end concern, where every component in the system needs careful consideration as a unit but also as a part of a whole.

Optimistic UI is a small change with a tangible impact in our quest to design and grow the most reliable, UX-focused search & discovery platform.
