Add InstantSearch and Autocomplete to your search experience in just 5 minutes

A good starting point for building a comprehensive search experience is a straightforward app template. When crafting your application’s ...

Senior Product Manager

A good starting point for building a comprehensive search experience is a straightforward app template. When crafting your application’s ...

Senior Product Manager

The inviting ecommerce website template that balances bright colors with plenty of white space. The stylized fonts for the headers ...

Search and Discovery writer

Imagine an online shopping experience designed to reflect your unique consumer needs and preferences — a digital world shaped completely around ...

Senior Digital Marketing Manager, SEO

Winter is here for those in the northern hemisphere, with thoughts drifting toward cozy blankets and mulled wine. But before ...

Sr. Developer Relations Engineer

What if there were a way to persuade shoppers who find your ecommerce site, ultimately making it to a product ...

Senior Digital Marketing Manager, SEO

This year a bunch of our engineers from our Sydney office attended GopherCon AU at University of Technology, Sydney, in ...

David Howden &

James Kozianski

Second only to personalization, conversational commerce has been a hot topic of conversation (pun intended) amongst retailers for the better ...

Principal, Klein4Retail

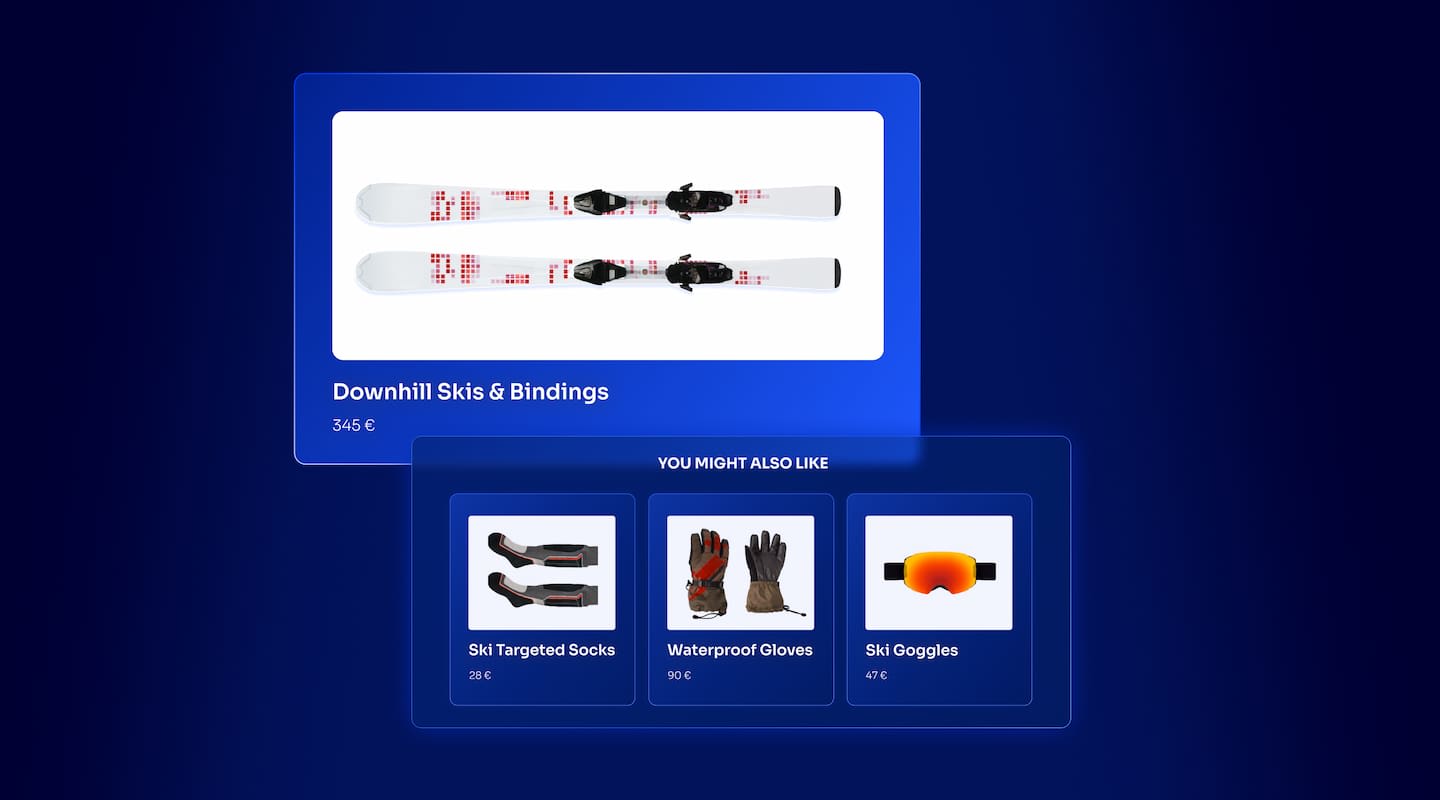

Algolia’s Recommend complements site search and discovery. As customers browse or search your site, dynamic recommendations encourage customers to ...

Frontend Engineer

Winter is coming, along with a bunch of houseguests. You want to replace your battered old sofa — after all, the ...

Search and Discovery writer

Search is a very complex problem Search is a complex problem that is hard to customize to a particular use ...

Co-founder & former CTO at Algolia

2%. That’s the average conversion rate for an online store. Unless you’re performing at Amazon’s promoted products ...

Senior Digital Marketing Manager, SEO

What’s a vector database? And how different is it than a regular-old traditional relational database? If you’re ...

Search and Discovery writer

How do you measure the success of a new feature? How do you test the impact? There are different ways ...

Senior Software Engineer

Algolia's advanced search capabilities pair seamlessly with iOS or Android Apps when using FlutterFlow. App development and search design ...

Sr. Developer Relations Engineer

In the midst of the Black Friday shopping frenzy, Algolia soared to new heights, setting new records and delivering an ...

Chief Executive Officer and Board Member at Algolia

When was your last online shopping trip, and how did it go? For consumers, it’s becoming arguably tougher to ...

Senior Digital Marketing Manager, SEO

Have you put your blood, sweat, and tears into perfecting your online store, only to see your conversion rates stuck ...

Senior Digital Marketing Manager, SEO

“Hello, how can I help you today?” This has to be the most tired, but nevertheless tried-and-true ...

Search and Discovery writer

Just as with a school kid who’s left unsupervised when their teacher steps outside to deal with a distraction, unsupervised learning in a machine-learning scenario can have its drawbacks. For instance, consider search technology. With no data scientist overseeing the generative process, Ms. or Mr. Machine could get creative in the sources it deems accurate, stringing together unrelated information to present search results that don’t quite compute — that is, they aren’t necessarily true or accurate.

In our era of Big Data, supervised learning has inherent value. It seems safer. When a kid isn’t left alone to let who-knows-what ensue, there aren’t usually issues.

But when it comes to AI-powered search, among other applications, unsupervised learning can’t simply be written off as too risky. For one thing, it offers way too many major benefits. In terms of data science, one huge advantage is the removal of human bias. Instead of having a researcher predefine classification groups, all on its own, the independently minded machine puts forth a number of clusters based on empirical proof.

So an unsupervised data mining system that can detect many kinds of data groupings based on similarities it can detect — for instance, as applied to customer segmentation or image segmentation — is undoubtedly a powerful tool.

Unsupervised learning utilizes clustering: grouping data points in scatter plots in classes that share similar characteristics. Instead of predefined categories, unlabeled data is classified (clustered) by features.

When doing clustering segmentation with words, things can get complex because some words can be placed in a variety of clusters. For example, a word like “moving” might be placed in multiple grammatical clusters (because it’s a verb and an –ing word), as well as clusters related to driving a car and relocation.

Fortunately, the various problems arising from establishing word meaning in machine learning can be summarily solved. And that’s where the k-means clustering algorithm comes in handy.

The variable k, referring to the number of centroids you need in the dataset, is predefined. It represents the number of groups or categories. The data is divided into k clusters (classes).

The “means” in k-means refers to the averaging of data related to the location of a center point, or centroid. A centroid is the (real or imaginary) center of the cluster.

K-means looks for a fixed number (k) of clusters in a dataset.

With k-means, the distance between each point in the dataset and each initial centroid is calculated. According to one study, “Distance measure plays a very important rule [in] the performance of this algorithm…. Based on the values found, points are assigned to the centroid with minimum distance. Hence, this distance calculation plays [a] vital role in the clustering algorithm.”

The goal of using the k-means algorithm is to minimize the sum of distances between the data points and their corresponding clusters, grouping similar data points together and thereby being able to see underlying patterns.

Each dataset can belong to only one group of similar properties, which pinpoints the location of the cluster center of mass (the centroid, a collection of feature values).

The k-means clustering algorithm starts by randomly choosing data points as proposed cluster centroids, the beginner points for the initial clusters. A k number of centroids is identified.

The algorithm assigns each data point to its nearest center point. Every point is allocated to the nearest cluster by reducing the in-cluster sum of squares, while simultaneously ensuring that the centroids stay as compact as possible. The points near the center create the cluster.

The algorithm uses an iterative (repeating) approach to pinpoint the best value for each centroid, recalculating new centroids until it ultimately comes up with workable final cluster assignments. When the initial centroids are estimated, these estimates are pinpointed by repeatedly assigning examples to their closest centroids and, based on the new assignments, recomputing the centroids.

With this step-1 activity complete, a new data point is assigned for each cluster. The process is repeated until convergence is reached, with the recalculated centroids matching the initial centroids. The centroid values no longer change and the defined number of iterations has been reached. If the convergence criterion is not attained, there is still a limit on the maximum number of iterations (between 1 and 999). Either way, the algorithm has a trigger to stop creating and optimizing clusters.

With k-means, to determine the number of clusters in a data set, data scientists often apply the aptly named elbow method. Based on where the curve of the plotted data shows an “elbow” or starts to straighten out after heading up, they can see where returns will diminish. Based on that, they can determine the optimal number of clusters to use.

With k-means, there’s another clustering method as well. You can alternatively choose the number of clusters by analyzing the silhouette plot, which shows how close each point in a cluster is to points in other clusters.

The k-means clustering algorithm is employed extensively to analyze data clusters in applications ranging from search to business analysis.

Among the various unsupervised machine-learning algorithms available, what makes k-means stand out?

In a word, speed. Whether there’s not much information or there’s a very large dataset, k-means excels at quickly dividing data into clusters. It’s also flexible, and it’s known to work well immediately. This is an expedient way to figure out the categories of groups in an unlabeled dataset without training a machine.

The advantage of this approach is also that the human bias is taken out of the equation. Instead of having a researcher create classification groups, the machine creates its own clusters based on empirical proof, as opposed to people’s assumptions.

The downsides? A few considerations:

Hope you’ve enjoyed this quick introduction to k-means clustering. If you want to learn more:

Looking for potent optimization of your website? Algolia utilizes k-means clustering among other algorithms to return fast, accurate AI-powered search results and recommendations for our clients’ sites and apps.

See how our groundbreaking NeuralSearch technology can improve the search and discovery experience for your users, plus potentially boost your site metrics up along with your customer satisfaction levels. Contact us for all the details!

Powered by Algolia Recommend